The Moment of Change for Neuromorphic Computing Systems

Today marks the end of a grueling but exciting six weeks since we launched Knowm Inc. I have spoken with dozens of analysts and reporters and criss-crossed the country. We introduced Knowm Inc. and our neuromemristive technology stack just prior to the Semicon West trade show. We delivered the SENSE Server and our new program kick-off presentation to our sponsors at Air Force Research Labs in Rome, NY. We met Dr. Leon Chua, the theoretical inventor of the memristor, at the 2015 IEEE 58th International Midwest Symposium on Circuits and Systems (MWSCAS) in Ft. Collins, Colorado, where he was the keynote presenter. We were thrilled to give him two of our new BS-AF-W memristors. We wrapped things up at the Karles Invitational Conference on Neuroelectronics in Washington DC, where we watched presentations by leading-edge researchers in brain imaging, interfacing, and emulation. We have spoken with many people, answered a lot of questions and corrected a few misconceptions. It has been an intense few months, to put it mildly. The general feeling is one of slight disassociation, almost as if we are living in a book. All of us at Knowm Inc. will remember these days for the rest of our lives. There is one moment, however, that stands above all the others: a moment when I knew that the constraints that previously dictated the design of computing systems, particularly neuromorphic computing systems, had undergone a rather dramatic and irreversible change. A moment when I became instantly aware that the rules of the game had changed.

Dreaming About Data

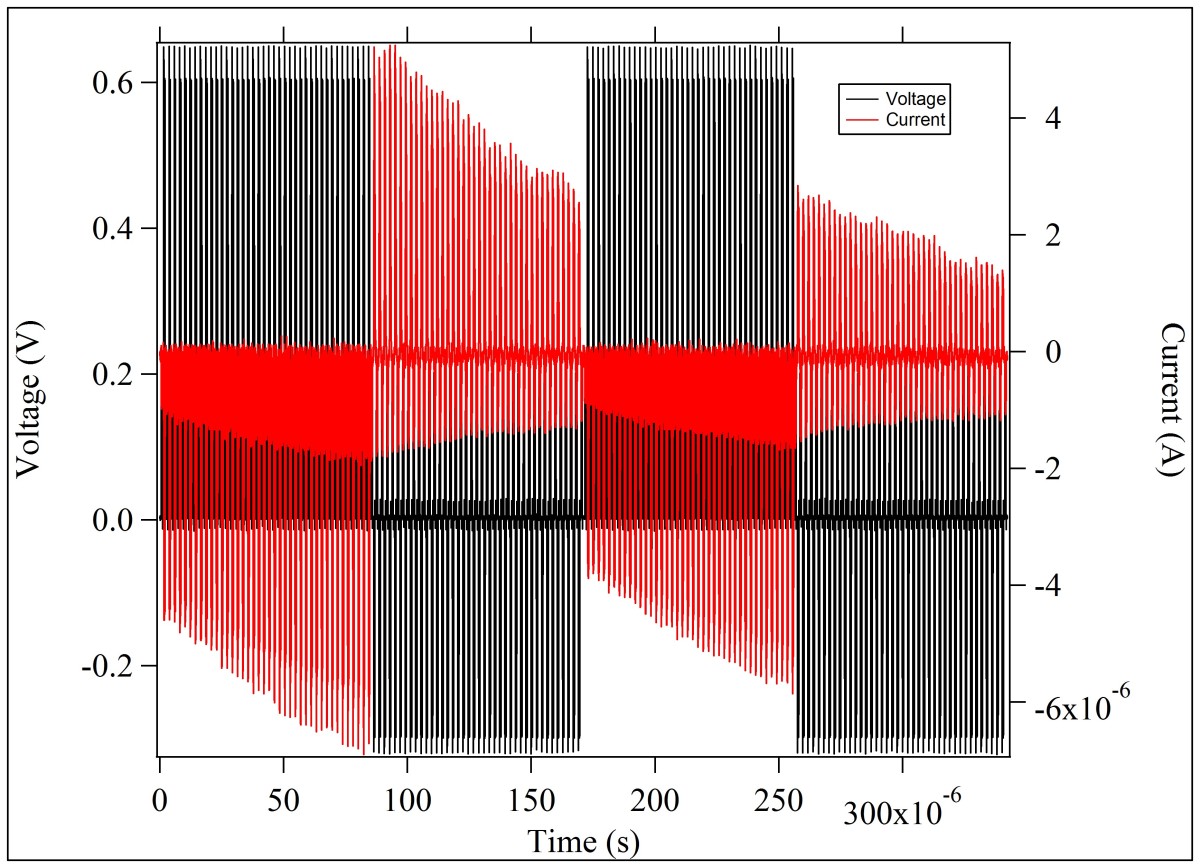

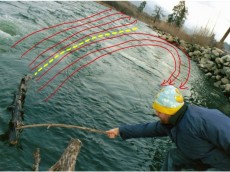

I had taken a rare day to be with friends and tube down a stretch of the Rio Grande. It was a day of relaxation sandwiched between long stretches of what has become a normal high level of intense, high-consequence work. About a week before, I had been talking with Knowm collaborator Dr. Kris Campbell, the inventor of Knowm’s BS-AF-W memristors. I had requested some data, and I was nervously awaiting its arrival. On that wonderful sunny day I was driving out of the Rio Grande Gorge when my cell phone reception came back online, initiating a stream of notifications. One of those notifications was for an email with an attachment. It is hard to convey just how consequential this data was to me, but suffice it to say that I had previously dreamt about it. The only difference between the plot of data I was looking at on my phone and my dream was that, in my dream, the data was animated. To understand what this data means, let me digress.

Image by ▓▒░ TORLEY ░▒▓

The Search for a Memristor with Incremental Resistance States

I have given up the last dozen years of my life to the pursuit of what I have called “physical neural networks”. Some call it “analog” or “neuromorphic”, but neither of these words gets to the heart of what I mean. Some call it “wetware”. I call it a chip that can truly learn, not through computation but because it is intrinsically adaptive. Indeed, the words that we use to describe computation do not even allow for it! It is neither “hardware” nor “software”. What I have been searching for, crudely, is a form of “soft-hardware”. This new computing substrate is made possible by a device we call a “memristor”, which Dr. Leon Chua recognized as a fundamentally new type of electronic circuit element back in 1971.

What we have been searching for was not just any memristor though. We have been looking for something rather specific. Indeed, when Tim and I published the theory of AHaH Computing we specified precisely what we were looking for:

Based on our current understanding, the ideal device would have low thresholds of adaptation (<0.2 V), on-state resistance of ~100 kΩ or greater, high dynamic range, durability, the capability of incremental operation with very short pulse widths and long retention times…

—AHaH Computing–From Metastable Switches to Attractors to Machine Learning

Learning algorithms work through a process of iterative refinement of the value of ‘weights’ or ‘synapses’. When a learning network makes a mistake, signals are generated that inform the synapse how to change to reduce or eliminate the error. Like people, learning algorithms learn from their mistakes. The field of Machine Learning (ML) is essentially about understanding how to make adjustments to these weights to accomplish some objective. In almost every machine learning algorithm or program, there lies an equation that looks something like this:

$$w_{t+1} = w_t + \Delta w$$

In other words, the weight is nudged a bit. Sometimes it’s nudged in a positive direction. Sometimes it’s nudged in a negative direction. Sometimes it’s a big nudge. Sometimes it’s a small nudge. Most of machine learning boils down to understanding how to nudge weights around.
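To make the nudge concrete, here is a minimal sketch of one classic way to compute it, the delta (least-mean-squares) rule. It is only one illustrative choice among many learning rules, and the function name `delta_rule_step`, the learning rate and the toy input/target are assumptions made for the example. The sign of the error decides the direction of each nudge, and the size of the error decides how big it is.

```python
def delta_rule_step(weights, inputs, target, learning_rate=0.1):
    """Nudge every weight once: w <- w + dw, with dw from the delta (LMS) rule.

    Hypothetical helper for illustration; not taken from any specific library.
    """
    prediction = sum(w * x for w, x in zip(weights, inputs))
    error = target - prediction  # the "mistake" that drives learning
    # The sign of the error sets the direction of each nudge; the error size,
    # scaled by the input and the learning rate, sets how big the nudge is.
    return [w + learning_rate * error * x for w, x in zip(weights, inputs)]


weights = [0.0, 0.0]
inputs = [1.0, 2.0]
for _ in range(50):
    weights = delta_rule_step(weights, inputs, target=1.0)

prediction = sum(w * x for w, x in zip(weights, inputs))
print(weights, prediction)
```

Repeated small positive nudges carry the prediction up to the target. If the prediction overshoots, the error turns negative and the same line nudges the weights back down.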

Image by jDevaun.Photography

So if we were to build a new type of self-learning hardware containing hundreds of thousands of billions of constantly adapting weights, as biological brains do, it would make sense to give it a way to adapt those weights really efficiently. Memristors fit the bill for what we are looking for, but it’s important to understand that not all memristors are the same.

The space of possible memristors is enormous and most of them don’t work exactly how we want them to. Some are too stochastic (random). Some do not switch quickly enough, or they switch too fast. Some are not bi-directional (meaning that we can’t controllably increase and decrease the resistance by reversing the direction of the applied voltage). Many memristors are not incremental, meaning that their resistance can only be changed in large steps. Others are only incremental in one direction and will abruptly change in the other direction. Still others will stop working after only a few thousand switching events. Indeed, when one stops theorizing and goes out to find a suitable memristor, things get very difficult very quickly!

Over a year ago, as the AHaH Computing manuscript was making its way through a contentious peer-review process, I had communicated to Dr. Campbell what I was looking for. She said to me, rather surprisingly, “Yeah, I think I can do that.” After so many years of seeing devices that failed in some way or another and watching massive companies and research organizations fall short, I was pleasantly surprised, if a little skeptical. About a year later, on this special day driving out of the Rio Grande Gorge, I was staring at the data that demonstrated Dr. Campbell had delivered exactly what she said she would. Through numerous discussions with her since our first meeting, I have discovered that she is probably the single most under-appreciated scientist in this field. Once you meet her, it’s not hard to understand why. She is more interested in making discoveries in the lab and teaching her students than in promoting herself.

Beyond the conceptual problems of understanding how to design learning processors around memristors, the first step toward addressing the adaptive power problem (the energy cost of constantly adapting weights) is a bi-directional, voltage-dependent, incremental memristor with high cycle endurance and modest or high non-volatility (the resistance state does not decay too quickly). By making the nudge magnitude a function of the voltage, we gain control over the size of the nudge. By reversing the direction of the voltage, we can change the direction of the nudge: positive or negative. That, in a nutshell, is what we have been looking for in a memristor, and that’s why I will forever remember the moment I first saw the data from Dr. Campbell.
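The behavior described above can be sketched as a toy device model. This is a minimal sketch under stated assumptions: it uses a simple linear-threshold update chosen purely for illustration, not Knowm’s Generalized Metastable Switch model and not a fit to BS-AF-W data. The class name `ToyMemristor` and every parameter value (threshold, rate, conductance bounds) are hypothetical. The pulse voltage sets both the direction of the nudge (its polarity) and its size (how far it exceeds the threshold).

```python
from dataclasses import dataclass


@dataclass
class ToyMemristor:
    """Hypothetical bi-directional, voltage-dependent incremental memristor.

    All parameter values are illustrative assumptions, not device data.
    """
    conductance: float = 10e-6   # siemens; 10 uS corresponds to 100 kOhm
    g_min: float = 1e-6          # lowest reachable conductance (1 MOhm)
    g_max: float = 100e-6        # highest reachable conductance (10 kOhm)
    threshold: float = 0.15      # volts; weaker pulses leave the state alone
    rate: float = 10.0           # siemens per (volt of overdrive * second)

    def apply_pulse(self, voltage: float, width: float) -> float:
        """Apply one pulse lasting `width` seconds; return the current in amps."""
        current = self.conductance * voltage  # Ohm's law at the pre-pulse state
        overdrive = abs(voltage) - self.threshold
        if overdrive > 0:
            # The nudge grows with how far |V| exceeds the threshold...
            step = self.rate * overdrive * width
            # ...and its direction follows the polarity of the voltage.
            self.conductance += step if voltage > 0 else -step
            # Keep the state inside the device's finite dynamic range.
            self.conductance = min(max(self.conductance, self.g_min), self.g_max)
        return current


m = ToyMemristor()
start = m.conductance
m.apply_pulse(0.2, 1e-6)   # positive pulse: conductance nudged up
after_up = m.conductance
m.apply_pulse(-0.2, 1e-6)  # negative pulse: conductance nudged back down
after_down = m.conductance
m.apply_pulse(0.1, 1e-6)   # sub-threshold pulse: state unchanged
```

With these assumed numbers, a 0.3 V pulse has three times the overdrive of a 0.2 V pulse, so it nudges the conductance three times as far. The threshold is what lets small read voltages observe the state without disturbing it.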

This data shows the current and voltage across our BS-AF-W memristors, available in our web store. As pulses of equal voltage magnitude are applied, the current increases when the voltage is positive and decreases when the voltage is negative. In other words, we can nudge the resistance around. A careful look at the magnitude of the current and voltage reveals a resistance of about 100 kΩ. Last year we asked for a memristor with a “low threshold of adaptation (<0.2 V), on-state resistance of ~100 kΩ or greater, high dynamic range, durability, the capability of incremental operation with very short pulse widths and long retention times”. As they say, be careful what you wish for, because you just might get it.
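Reading resistance off such a plot is Ohm’s law, R = V / I, applied pulse by pulse. The sketch below generates synthetic readings rather than using the measured data. It assumes a hypothetical starting conductance of 10 µS (100 kΩ, chosen only to echo the figure above), a fixed conductance step per pulse, and ±0.2 V pulses; none of these numbers come from the BS-AF-W measurements, and both function names are hypothetical.

```python
def simulate_pulse_train(voltages, g0=10e-6, step=0.2e-6):
    """Return synthetic (voltage, current) readings for a train of pulses.

    Hypothetical model: each pulse is read with Ohm's law (I = G * V) and then
    shifts the conductance G by a fixed step in the direction of its polarity.
    """
    g = g0
    readings = []
    for v in voltages:
        readings.append((v, g * v))
        g += step if v > 0 else -step
    return readings


def resistance(voltage, current):
    """Ohm's law: R = V / I, in ohms."""
    return voltage / current


pulses = [0.2] * 5 + [-0.2] * 5  # equal-magnitude pulses: five positive, five negative
readings = simulate_pulse_train(pulses)
current_magnitudes = [abs(i) for _, i in readings]
resistances_kohm = [resistance(v, i) / 1e3 for v, i in readings]
```

The current magnitude climbs during the positive pulses and falls during the negative ones, while every V / I ratio stays in the neighborhood of 100 kΩ.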

A Historic Moment in Memristor Technology

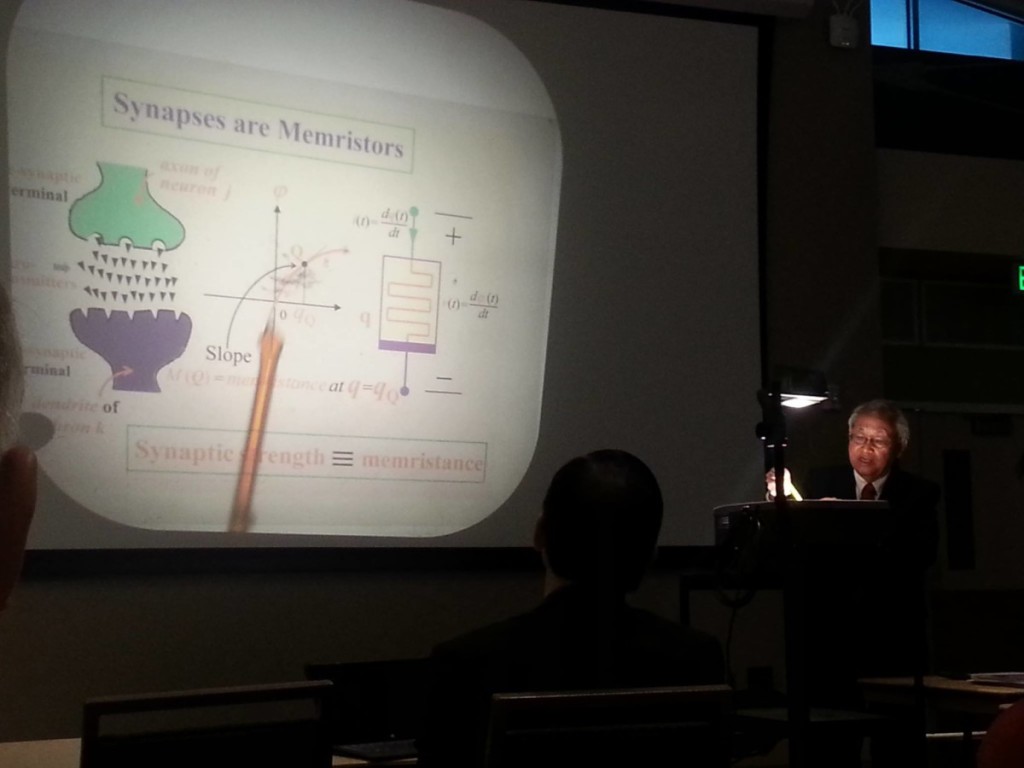

Impressed with Dr. Campbell’s work, Dr. Leon Chua (the theoretical inventor of the memristor) invited her to demo the devices following his keynote presentation at the 2015 IEEE 58th International Midwest Symposium on Circuits and Systems. I was lucky enough to be there and captured the event on my cell phone.

Understanding the Implications

Intelligence is inextricably linked to learning, and learning is a memory-processing operation. Indeed, a quick search for the definition of intelligence will yield something like this:

Intelligence: (Noun) The ability to acquire and apply knowledge and skills.

The ‘ability to acquire and apply knowledge’ sounds an awful lot like learning to me. It makes sense that, if we wanted to create a powerful intelligence, we would want a technology that radically reduces the power (joules per second) and space (volume) required to run it. By eliminating the energy associated with computing adaptation, we can create the world’s most energy-efficient learning systems. Given two learning systems with equivalent primary performance benchmarks, the more energy-, space- and weight-efficient system will win. Just consider two autonomous robots competing or fighting each other. The one that is able to pack more adaptive synapses into the same space will likely win, as that system will be able to process more sensory streams (sensors) and evaluate more options (strategize or plan). If mobile, it will have greater range or be deployable on smaller platforms. The first country to robustly solve the adaptive power problem, as it relates to general-purpose machine learning, will have extraordinary commercial and military advantages. Indeed, some philosophers, such as Nick Bostrom, have gone beyond the short-term implications and started theorizing about the risks of Artificial General Intelligence. It is as clear to him as it is to me:

It now seems clear that a capacity to learn would be an integral feature of the core design of a system intended to attain general intelligence, not something to be tacked on later as an extension or an afterthought. –Nick Bostrom, “Superintelligence: Paths, Dangers, Strategies”

Further Reading

- The Trouble with Oxide-based Memristors: http://knowm.org/the-trouble-with-oxide-based-memristors

- Knowm Inc.’s BS-AF-W Memristors: http://knowm.org/product/bs-af-w-memristors/

- Dr. Kris Campbell’s page on knowm.org: http://knowm.org/teams/kris-campbell/

- The Story of My Memristor – Kris Campbell: http://knowm.org/the-story-of-my-memristor-kris-campbell/

- Knowm Collaborates with Kris Campbell at BSU: http://knowm.org/knowm-collaborates-with-kris-campbell-at-bsu/

- How to Build the Ex-Machina Wetware Brain: http://knowm.org/how-to-build-the-ex-machina-wetware

- The Generalized Metastable Switch Memristor Model: http://knowm.org/the-generalized-metastable-switch-memristor-model

- The Problem is Not HP’s Memristor–It’s How They Want To Use It: http://knowm.org/the-problem-is-not-memristors-its-how-hp-is-trying-to-use-them/
