Is there something else out there?

At a fundamental level, everything in the Universe originates from natural processes of self-organization. At the lowest levels we see simple and highly deterministic processes or ‘rules’ that guide the time-evolution of matter and energy. We call these rules the ‘laws of physics’. Physics–and more broadly the pursuit of science–has been a remarkably successful methodology for understanding how the gears of reality turn. We really have no other methods–and based on humanity’s success so far we have no reason to believe we need any.

Image by Hubble Space Telescope / ESA

Our understanding of the Universe is accelerating, and will very likely continue to do so. This process is not perfectly smooth of course. We tend to fall into traps where the pursuit of science entangles with the pursuits of business and ego. For the ideas of Quantum Mechanics (QM) to flourish, for example, the old practitioners of classical mechanics had to (literally) retire. This begs the question: If the revolution brought forward by QM could only occur after the practitioners of the preceding paradigm faded into retirement (a nicer way of saying they died)–is there something else out there? What happens when the modern practitioners of physics release their grip? Will something else emerge? Yes, I believe that it will.

Searching for answers in the void

There is an absurdity to mainstream physics that I must point out. I first noticed it as an undergraduate physics major after my introduction to QM, quantum computing (QC) and black holes. Much has been written about these topics, and over and over again we are told how important they are. (Before you gather the angry mob, let me say that I agree: they are important.) We are told that a unification of physics is just within reach if we could only marry the physics of gravity with the physics of QM. Black holes are both proven to exist and appear to be the place where the physics of large-scale things (gravity) meets the physics of the very small (quantum mechanics). If we could marry gravity and quantum mechanics to create a unified theory of quantum gravity–then we would understand it all! Makes perfect sense, right?

Image by NASA Goddard Photo and Video

Quantum computing arises from the strange idea that a particle (or more generally a physical state), if left to total isolation, does not act like a particle but rather spreads its tendrils out through space in all possible paths with some probability given by its wave-function. Even stranger than this, if the particle (a “qubit”) interacts with another particle–but otherwise does not interact with anything else, the two become “entangled” and these strange tendrils explore a joint configuration space together. The combined ridiculousness and potential power of this property was not lost on Nobel Laureate physicist Richard Feynman, the inventor of QC, who famously said “I think I can safely say that nobody understands quantum mechanics.”

Image by tlwmdbt

Feynman realized that it is theoretically possible to exploit this property of Nature by forcing those ‘tendrils’ to explore and interact over all possible paths and massively speed up some types of computation. Sounds amazing, of course–and theoretically it is. But it is also practically insane because Nature abhors qubits. Everything in the world around you, including you, is the result of quantum decoherence–the act of qubits collapsing–which is exactly what you do not want in a QC, since the whole enterprise rides on exploiting entanglement long enough to search some combinatorial space. It’s like trying to balance a few dozen needles on their tips in a car racing down a bumpy dirt road. The structure of the Universe is literally coming into existence via the act of quantum decoherence–and it is this process that QC is trying to get rid of! Hence, QC is one of the hardest engineering tasks one could possibly dream up because it is literally fighting a fundamental process that gives rise to reality as we know it. If it turns out that QC is practically possible then it is a profoundly ingenious idea. On the other hand, it is also possible that it was a mistake. One could say that QC currently exists in a superposition of brilliance and absurdity and its wave-function has yet to collapse. The grandfather of quantum mechanics, Erwin Schrödinger, was perfectly aware of this absurdity.

To illustrate it, he concocted a thought experiment with a cat in a box. Schrödinger did not wish to promote the idea of dead-and-alive cats as a serious possibility; on the contrary, he intended the example to illustrate the absurdity of the existing view of quantum mechanics. In an ironic twist, Schrödinger’s Cat is now used to teach the principles of quantum mechanics rather than illustrate its embarrassing absurdity.

Image by Laganart

Alas, Schrödinger’s cat is not the absurdity I want to bring to light. It is this: We are led to believe that the apex of physics is to understand the stuff we cannot touch (qubits) and the places we cannot go (black holes) while ignoring life itself. You cannot, by definition, hold a black-hole or a qubit in your hand. You can’t, by definition, directly measure or probe them. We tell ourselves that we can understand Life, the Universe, and Everything if we could only understand those things we cannot directly measure while steadfastly ignoring Life itself. It’s totally absurd! We can understand the physical mechanisms of Life via the same intellectual tools and techniques that we have used to understand everything else.

I have heard some say that nothing useful would come of directing the modern physics apparatus toward life. Worse yet, people actually snicker, as if the idea is inherently mis-guided or out of bounds. On the contrary, Life is the force that creates technology on Earth. Unlocking that power will transform humanity in ways we cannot even begin to fathom. Best of all, it is not out of reach! We can touch it and we can probe it. We are it, for goodness sake.

A Framework for a New Branch of Physics

“Artificial artifacts” are attributed to human creation, while “Natural artifacts” are attributed to natural evolution. The reality is that everything in the world is a result of matter configuring itself, including of course the technology that humans manufacture and the human brains that guide those processes. It’s all just matter configuring itself, i.e. “thermodynamic evolution”. There is no line between the natural and artificial worlds because humans are natural. The appropriate response to this statement in my opinion is “Duh”.

Physics can in large part be described as the mathematical accounting of energy over time. Objects in the physical world organize themselves to reduce potential energies. A rock rolls down the hill because doing so reduces its gravitational potential energy. A chemical reaction proceeds by lowering its chemical potential energy. Current flows in an electronic circuit because electrons move from regions of high to low potential energy. It is of course logical that the mechanisms that describe the thermodynamic evolution we see in Life are simply an accounting of energy within the area of dissipative structures. That is, a necessary and complete account of thermodynamic evolution can be attained from just another description of matter’s continual quest to reduce energetic potentials.

The failure to identify living systems as thermodynamically self-assembling structures is in part a consequence of the Darwinian theory of evolution (ToE)–the most successful description of Life for the last two centuries. The ToE describes evolution as mutations or changes on the genotype (DNA code) and selection on the phenotype (“the body”), while neglecting the lower and higher levels of organization. For example, the ToE does not answer the following questions: “How do molecules that form a cell self-organize into the collective cell?”, “How do the many trillions of cells that make up our bodies self-organize to form the body?”, “How do the individuals that make up a species self-organize into a society with individual specialization and division of labor?”, “How do the billions of neurons that make up a biological nervous system self-organize to form a brain and control a body within an environment?”. Although biological organization occurs at multiple levels, the ToE describes only two disconnected levels and one mechanism: a molecule that encodes a genotype, a body that encodes the phenotype, and the selection of configurations through time via reproduction and death.

Thermodynamic Evolution (TE) is responsible for the creation of structure from a homogeneous state. Within the physics vernacular, TE is responsible for “symmetry breaking”, where symmetry describes the high-entropy state where energy is evenly spread throughout the assembly. TE is built on the assumption that structure in the world exists for a precise purpose: the dissipation of free energy. “Survival of the fittest” may be reformulated to a more exact physical statement: Structure that is responsible for more free-energy dissipation is stable because it uses the energy to repair itself.

Structure requires work to build and maintain it against the inevitable decay wrought by the second law of thermodynamics. Consider, for example, a primitive building constructed of dried mud bricks. It takes the dissipation of energy to assemble and repair the brick. Dirt and water must be mixed with straw that must be grown. Molds must be formed and the bricks dried. The bricks must then be lifted against the gravitational potential into a non-homogeneous configuration. The bricks degrade over time from exposure to wind and water. Without constant repair, the building will dissolve back into the homogeneous state from which it emerged. The repair of the mud structure is directly linked to its ability to dissipate energy within the world.

Image by archer10 (Dennis) 90M Views

For example, the structure’s inhabitant (also a volatile structure), may use the structure as a residence. If the structure succeeds in protecting the inhabitant from the degrading effects of the environment then the inhabitant will be better able to conserve energy, which may be directed toward the repair of the structure. On the other hand, if the structure fails to increase the inhabitant’s ability to dissipate energy, for example by requiring the inhabitant to spend more time on its repair than on obtaining food and resources (free energy), then the structure can be seen as participating in its own destruction (2nd Law). In the event of death or sickness of the inhabitant, the structure will decay back into the homogeneous state from where it came.

Consider the organization of a company. When a company is formed it survives at first (repairs its state) on the wealth of its founders or investors. Let us suppose that the company was ultimately a failure so that the internal energy reserves are depleted while no external wealth is harvested from the economy. The energy-dissipation pathway that is represented by the company is now damaged in that it is less likely that such a company will maintain its old state when a new investment round is acquired–No point in putting money into a losing venture. On the other hand, if the company does manage to harvest wealth then its structures (product or service-producing assets) will be repaired and expanded. Furthermore, it is certainly possible that the amount of wealth harvested is less than the amount of wealth invested in the company structure. In this case, if it is possible to shed internal structure so that the amount of wealth harvested exceeds or equals the amount of wealth invested in the projection of state, such decay will occur and the company will stabilize on the available wealth flow.

As another example, consider the actions of two lions, Bob and Charlie, that must search for food in a hostile environment. Suppose that at some time both animals are located in the same position P0, and that two alternate watering holes are available, W0 and W1, where prey can be found.

Image by Azhar Khan (akwildshots)

Watering hole W0 is separated by a greater distance such that more energy must be expended to reach it, but the odds of finding prey are higher. Let’s suppose that the evaluation of Bob’s state causes him to walk to W0, while the evaluation of Charlie’s state causes him to walk to W1. In this example, each lion is damaging its state as it is evaluated. The more energy the lions expend without attaining energy from the environment, the closer they are to death. If it is the case that Bob’s state enabled him to attain energy while Charlie’s state did not, Bob will be selected in the sense that his state will be stabilized. Charlie, unable to find free-energy to repair his state, will succumb to decay (death). Note that the selection of Bob occurs because he succeeded in dissipating more energy from the environment (finding the prey). Survival of the fittest is accurately reframed as stabilization of successful energy dissipating pathways.

As the path connecting P0 and W0 is traversed many times, a trail forms in the ground. This trail guides Bob while also making the journey easier by removing obstacles along the way (rocks, plants, etc.). Not only has the structure of the lion been selected, but the physical trail that connects the lion to its food source is now stabilizing. At all levels, matter configures itself for dissipating energy.

I am not the only one to say such things! I am just another thinker in a long list of thinkers who are jumping up and down in excitement and pointing in the same (rather specific, to be blunt) direction. This has been going on for at least a century. Here are some:

“The device by which an organism maintains itself stationary at a fairly high level of orderliness consists of continually sucking orderliness from the environment.” -Erwin Schrödinger

“A system will select the path or assembly of paths out of available paths that minimizes the potential or maximizes the entropy at the fastest rate given the constraints.” -Rod Swenson

“The path maximizing time derivative of exergy under the prevailing conditions will be selected.” -Sven Jorgensen

“For a finite-size system to persist in time (to live), it must evolve in such a way that it provides easier access to the imposed currents that flow through it.” -Adrian Bejan

“Intelligence is a force, F, that acts so as to maximize future freedom of action.” -Alex Wissner-Gross

“Dissipation-driven adaptation of matter” -Jeremy England

“Free energy dissipation pathways competing for conduction resources” -Alex Nugent

Note: If you are reading this and know of somebody else who has made similar statements, please leave a comment below!

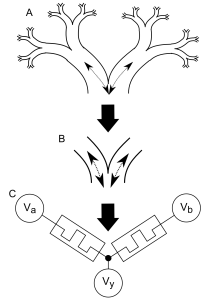

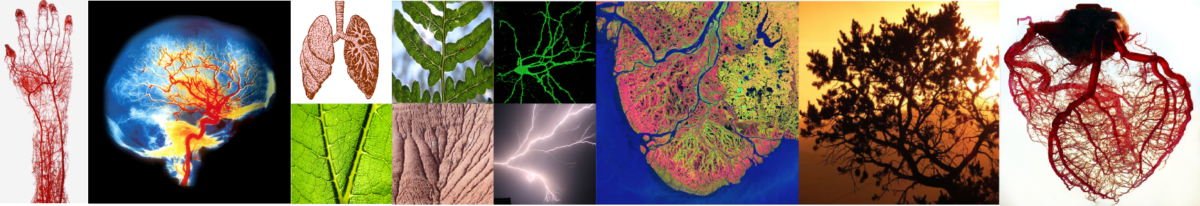

My statement stems from our work with AHaH Computing. When two energy-dissipating pathways compete for conduction resources, a Knowm synapse (aka kT-Bit) will emerge and it can be shown that the pair maximizes power dissipation while driving Hebbian or Anti-Hebbian learning. We see this building block for self-organized structures throughout Nature, for example in arteries, veins, lungs, neurons, leaves, branches, roots, lightning, rivers and mycelium networks of fungus.

Memristive Synapse

We observe that in all cases there is a particle that flows through competitive energy dissipating assemblies. The particle is either directly a carrier of free energy dissipation or else it appears to gate access, like a key to a lock, to free energy dissipation of the units in the collective. Some examples of these particles include water and sugars in plants, ATP in cells, blood in bodies, neurotrophins in neurons, and money in economies. In the cases of whirlpools, hurricanes, tornadoes and convection currents we note that although the final structure does not appear to be built of competitive structures, it is the result of a competitive process with one winner; namely, the spin or rotation. In other words, a hurricane is a ‘collapsed kT-Bit’.

We see the same self-organizing building blocks form at all scales, from cells to rivers to us. Energy dissipation pathways competing for conduction resources.

A Framework for a New Branch of Computing

New understanding in physics should directly translate to innovations in technology. This is arguably the defining characteristic of a “successful” theory. Quantum mechanics gives us understanding about how Nature works within some bounds and consequently enables us to build powerful technology. Would an understanding of thermodynamic evolution allow us to build powerful technology?

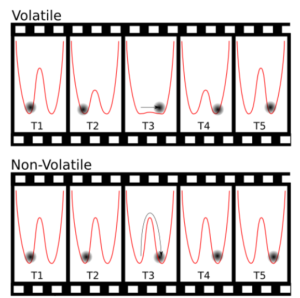

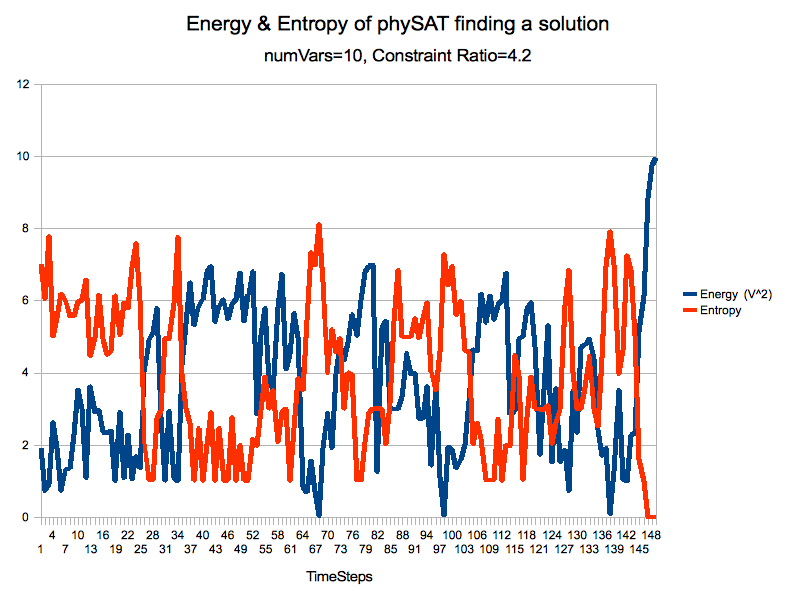

The more resistant a structure is to decay, the more energy is required to configure it. A house built of large carved stones is more resistant to decay but requires a great deal more energy to form. The same is true of electronic memory elements. The ability of a memory bit to hold its configuration against background energy fluctuations is dependent on the state’s potential energy barriers. The higher these barriers, the more energy must be dissipated to configure the bit. If the bit’s barrier potential is the result of a self-repairing process, we have a mechanism that can be used to self-configure solutions to constraint problems.
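The link between barrier height and stability can be made concrete with the standard Boltzmann factor, which is textbook statistical mechanics rather than anything specific to the simulations described in this post. The sketch below assumes barriers expressed in electron-volts and a temperature of 300 K; the function name and the sample barrier values are illustrative only.

```python
import math

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K


def flip_probability(barrier_ev: float, temperature_k: float = 300.0) -> float:
    """Boltzmann factor exp(-E_b / kT): relative chance that a thermal
    fluctuation carries the bit over a barrier of height E_b."""
    return math.exp(-barrier_ev / (K_B_EV * temperature_k))


# Hypothetical barrier heights, chosen only to show the trend.
for barrier in (0.1, 0.5, 1.0):
    print(f"{barrier:.1f} eV barrier -> relative flip chance {flip_probability(barrier):.3e}")
```

Raising the barrier from 0.1 eV to 1.0 eV suppresses thermally driven flips by many orders of magnitude, which is the sense in which a more durable bit costs more energy to write.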

Self Configuration of a Thermodynamic Bit

A good illustration of this comes from simulations I did with Todd Hylton during the SyNAPSE program. The idea is to define a new type of bit, which we called a “thermodynamic bit” or kT-bit for short (a single synapse AHaH node is one implementation of a kT-bit). The bit has a binary state, which mutates according to some probability. This probability is a function of how much energy it can harvest from its environment, which we represent by boolean constraint clauses. If the bit’s state satisfies all of its clauses then it gets energy, which it dissipates in the process of repairing its state. Consequently, if it can repair its state then it achieves stability, otherwise it starts to ‘flip-flop’. We formulated the kT-Bit as an RC circuit, where the charge on the capacitor created a voltage that affected the probability of the state transition. In that formulation, q is the charge of an electron, k is Boltzmann’s constant, T is the temperature, and V is the voltage on the capacitor C, which charges and discharges through resistor R. If the kT-Bit’s state satisfies all of its clauses, the feedback voltage is set to some positive value; otherwise it is set to 0.
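A single bit of this kind can be sketched in a few lines. This is not the original simulation code: it assumes the flip probability takes the Boltzmann form exp(-qV/kT), that the capacitor relaxes exponentially toward the feedback voltage with time constant RC, and that time advances in unit steps. The class name `KTBit`, its parameters, and the default values are all hypothetical.

```python
import math
import random

Q = 1.602176634e-19  # electron charge, coulombs
K_B = 1.380649e-23   # Boltzmann constant, joules per kelvin


class KTBit:
    """Toy thermodynamic bit: a binary state guarded by a capacitor voltage."""

    def __init__(self, temperature=300.0, rc=5.0, v_feedback=0.2, rng=None):
        self.state = False
        self.v_cap = 0.0                # voltage on the capacitor, volts
        self.temperature = temperature  # kelvin
        self.rc = rc                    # RC time constant, in time steps
        self.v_feedback = v_feedback    # feedback voltage when satisfied, volts
        self.rng = rng or random.Random()

    def flip_probability(self):
        # Assumed Boltzmann form: a higher capacitor voltage means a rarer flip.
        return math.exp(-Q * self.v_cap / (K_B * self.temperature))

    def step(self, satisfied, dt=1.0):
        """Relax the capacitor toward the feedback voltage, then maybe flip.

        Returns True if the state flipped during this step.
        """
        target = self.v_feedback if satisfied else 0.0
        self.v_cap += (target - self.v_cap) * (1.0 - math.exp(-dt / self.rc))
        if self.rng.random() < self.flip_probability():
            self.state = not self.state
            return True
        return False


bit = KTBit(rng=random.Random(1))
for _ in range(50):
    bit.step(satisfied=True)
print(bit.v_cap, bit.flip_probability())
```

With no feedback the capacitor sits at zero volts and the bit flips on every step, which is the 'flip-flop' regime; once the clauses are satisfied the capacitor charges and the state becomes progressively harder to disturb.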

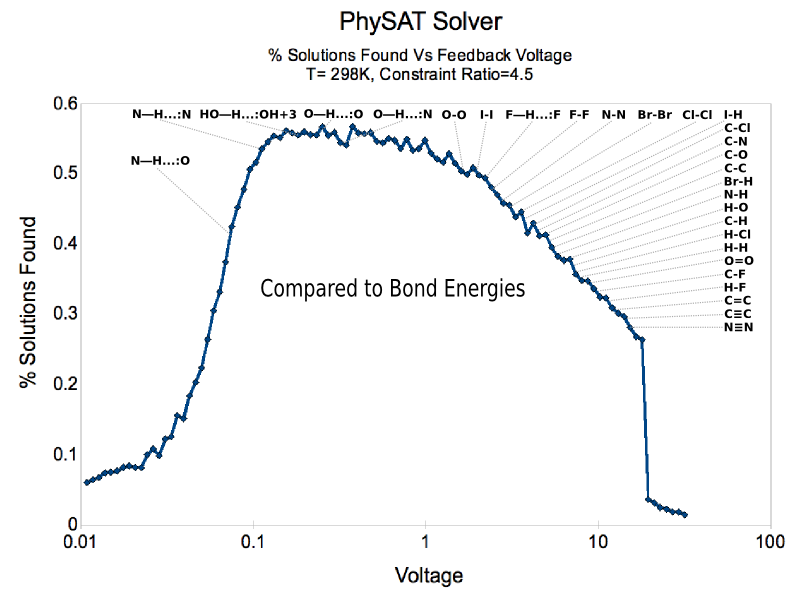

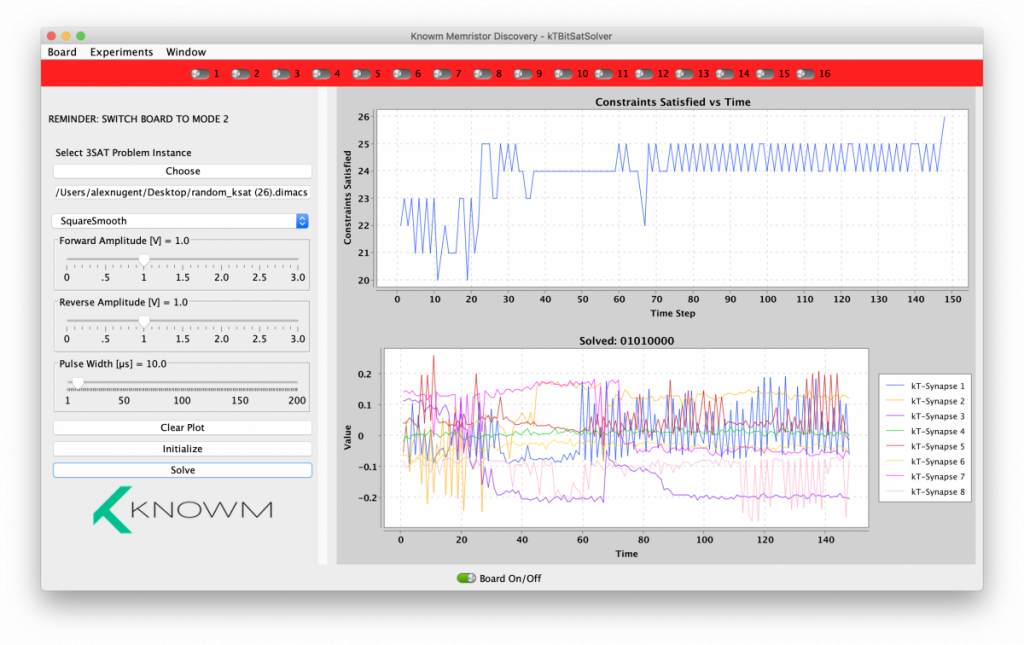

We decided to study the 3-Satisfiability (3SAT) problem, as it is a poster-child of the NP-Complete problems, and we called our creation “PhySAT”, short for “Physical-SAT”.

3SAT Problem Interpreted with kT-Bits

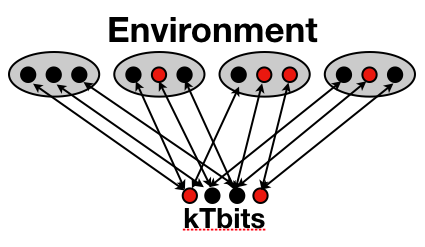

Given some constraints, the kT-Bits will evolve spontaneously to satisfy the constraints. The only stable solution is when all constraints are satisfied. Given some random problem, we can track the energy and entropy of the kT-Bits as they configure to solve the problem:

Evolution of kT-Bit Energy and Entropy while solving a typical 3SAT constraint problem.
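A toy version of this behaviour can be sketched as follows. It is not the PhySAT code and makes no claim about its performance: it assumes each variable is a bit whose capacitor charges only while every clause touching it is satisfied, that the flip probability is a Boltzmann factor in the capacitor voltage, and that bits are updated one after another within a sweep. The `attempt` factor below 1 is an added assumption that keeps starved bits from flipping in lock-step, and the count of unsatisfied clauses is used as a simple stand-in for the energy. All names and parameter values are hypothetical.

```python
import math
import random

THERMAL_VOLTAGE = 0.02585  # kT/q near 300 K, in volts


def clause_satisfied(clause, states):
    """A clause is a tuple of signed 1-based indices: 3 means x3, -3 means NOT x3."""
    return any(states[abs(lit) - 1] == (lit > 0) for lit in clause)


def solve(clauses, n_vars, v_feedback=0.3, rc=3.0, attempt=0.5,
          max_steps=100_000, seed=0):
    """Let the bits flip until every clause is satisfied or max_steps is reached.

    Returns (states, history), where history holds the number of unsatisfied
    clauses before each sweep; states is None if no solution was found.
    """
    rng = random.Random(seed)
    states = [rng.random() < 0.5 for _ in range(n_vars)]
    v_cap = [0.0] * n_vars
    relax = 1.0 - math.exp(-1.0 / rc)  # fraction of the gap closed per sweep
    # Clauses that mention each variable, i.e. that variable's "energy sources".
    touching = [[c for c in clauses if any(abs(lit) - 1 == i for lit in c)]
                for i in range(n_vars)]
    history = []
    for _ in range(max_steps):
        unsatisfied = sum(not clause_satisfied(c, states) for c in clauses)
        history.append(unsatisfied)
        if unsatisfied == 0:
            return states, history
        for i in range(n_vars):
            fed = all(clause_satisfied(c, states) for c in touching[i])
            target = v_feedback if fed else 0.0
            v_cap[i] += (target - v_cap[i]) * relax
            if rng.random() < attempt * math.exp(-v_cap[i] / THERMAL_VOLTAGE):
                states[i] = not states[i]
    return None, history


# A small, easily satisfiable instance used purely for illustration.
clauses = [(1, 2, 3), (-1, 2, 4), (-2, -3, 5)]
solution, history = solve(clauses, n_vars=5)
print(solution, history)
```

Bits whose clauses are all satisfied charge up and freeze, while bits attached to a violated clause discharge and start flipping again, so the only configuration in which every bit is fed is one where all constraints hold.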

We found the behavior fascinating and reminiscent of the idea of self-organized criticality postulated by Per Bak. The kT-Bits would undergo periods of relative stasis as a single bit or small combination of bits were flipped, followed by avalanches where the whole population came unstuck. This is more easily seen with an animation I generated for the same technique used to solve a Sudoku puzzle:

From a benchmarking perspective I found PhySAT was competitive on some problem instances, but suffered from a very short-term memory. While PhySAT was not going to win us any competitions, it did give us a big surprise. I am still not sure if what Todd and I saw was a coincidence or a foretelling of something more profound. I leave it for you to decide. Todd had the idea of comparing the effectiveness of the solver at various drive voltages with the most common bond energies of life:

Thermodynamic 3SAT Solver and Bond Energies of Life

In 2011 Todd and I left our respective roles with DARPA and fell out of touch. After winning SBIR and STTR funding from AFRL, AHaH Computing became my sole focus. I extended the work to include another search mechanism that I called a “Strike Search”, which Tim and I described in the AHaH Computing paper. We applied this to the traveling salesman problem:

and non-convex optimization:

The robotic arm controller we developed as part of the AHaH Computing paper was another variation, where each kT-Bit was represented as a collection of multi-synapse AHaH nodes that controlled the movement of opposing muscles on multiple joints:

I do not wish to claim that the above demonstrations are important algorithmic achievements. However, they all point in a clear direction where energy and information processing have merged–where matter is allowed to configure itself and by doing so solves a problem. There is nothing, from a technological perspective, that is preventing humanity from building new processors that exploit thermodynamic evolution. Indeed, this is our goal at Knowm.

Closing Remarks

If Thermodynamic Computing is indeed a new frontier in computing I would fully expect that multiple people are having and will have the same or similar ideas, using different words and conceptual frameworks. As an example, the recent Memcomputing work of Massimiliano Di Ventra and Yuriy V. Pershin appears to be an independent discovery of similar concepts. Edward O. Wilson describes what I believe is occurring with Thermodynamic Evolution as the phenomenon of Consilience, or “Literally a jumping together of knowledge by the linking of facts and fact-based theory across disciplines to create a common groundwork of explanation.” Lastly, I would like to express my feelings about the future, which consist of a mixture of excitement and trepidation. I believe that technology derived from thermodynamic evolution is going to be extremely powerful, and the consequences of what will become possible need to be appreciated and prepared for.

Additional Resources

The Adaptive Power Problem

Rod Swenson’s Law of Maximum Entropy Production

Adrian Bejan’s Constructal Law

AHaH Computing

Alex Wissner-Gross’s Causal Entropic Forces

Jeremy England’s Lab

Robert Fry’s Physical Intelligence and Thermodynamic Computing

What is Knowm

Review of 2017 Energy Consequences of Information Conference

4 Comments

Carl Lumma

England’s work is based on Gavin Crooks’ nonequilibrium work relation (1999). Crooks and collaborators (especially Susanne Still) have many interesting publications in this area.

Wissner-Gross draws heavily (with acknowledgement) on the work of roboticist Daniel Polani (and collaborators), e.g. “Keep Your Options Open” (2008).

Eric Chaisson has proposed power density as a measurement of “cosmic evolution” and has authored several popular books on the subject.

An interesting model of the global economy as a thermodynamic system is due to atmospheric scientist Tim Garrett ( http://www.inscc.utah.edu/~tgarrett/Economics/ ).

Kjell Hansen

http://www.ler.esalq.usp.br/aulas/lce1302/life_as_a_manifestation.pdf

http://www.americanscientist.org/issues/id.6378,y.2009,no.2,content.true,page.1,css.print/issue.aspx

https://arxiv.org/ftp/arxiv/papers/0910/0910.2621.pdf

http://www.helsinki.fi/~aannila/arto/natprocess.pdf

http://www.mantlethought.org/international-affairs/life-process-not-thing

http://michaelscharf.blogspot.ca/2014/02/a-new-equation-for-intelligence-f-t-s.html

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3909994/pdf/fnhum-08-00020.pdf

Keep up the good work!

Alex Avramov

quote from “Order Out of Chaos”, by Ilya Prigogine and Isabelle Stengers

“Equilibrium thermodynamics provides a satisfactory explanation for a vast number of physicochemical phenomena. Yet it may be asked whether the concept of equilibrium structures encompasses the different structures we encounter in nature. Obviously the answer is no.

“Equilibrium structures can be seen as the results of statistical compensation for the activity of microscopic elements (molecules, atoms). By definition they are inert at the global level … Once they have been formed they may be isolated and maintained indefinitely without further interaction with their environment. When we examine a biological cell or a city, however, the situation is quite different: not only are these systems open, but also they exist only because they are open. They feed on the flux of matter and energy coming to them from the outside world. We can isolate a crystal, but cities and cells die when cut off from their environment. They form an integral part of the world from which they can draw sustenance, and they cannot be separated from the fluxes that they incessantly transform.”

Jacques de Gerlache

Very interesting systemic approach to complex systems.

Do you know François Roddier’s book “Thermodynamics of Evolution”?

https://www.francois-roddier.fr/blog_en/wp-content/uploads/2017/03/Thermodynamics_of_evolution.pdf

A more personal perspective (in French): Dissipation, couples ago-antagonistes et complexité.

De la thermodynamique à la cancérogénèse, une approche des relations symbiotiques dissipatives.

http://www.res-systemica.org/afscet/resSystemica/vol11-Bernard-Weil/res-systemica-vol-11-art-02.pdf

and a “pedagogical tool” ; The principle of emergence ; https://www.youtube.com/watch?v=_WLYOYE8a5o

Truly yours,

Jacques de Gerlache

senior (eco)toxicologist