Intro to Machine Learning

“We do not receive wisdom, we must discover it for ourselves, after a journey through the wilderness which no one else can make for us, which no one can spare us” –Marcel Proust

Machine Learning is a subfield of artificial intelligence that aims to train intelligent machines by example. The approach has been very successful at creating computer programs that can do things like drive cars, recognize handwritten digits, and diagnose patients. However, the applications of Machine Learning are as diverse as human ability. That’s because we run on our own machine learning tool, the human brain, an interesting biological parallel which Machine Learning often takes advantage of.

Image by laszlo-photo

One of the attractions of Machine Learning, aside from its broad application, is its ability to solve many tasks by applying the same technique. This is because Machine Learning methods all “learn” in the same way. First a task is defined (categorizing handwritten digits, for instance), then examples are collected in a “training set”, and finally a Machine Learning method is applied which uses those examples to learn how to do that task.

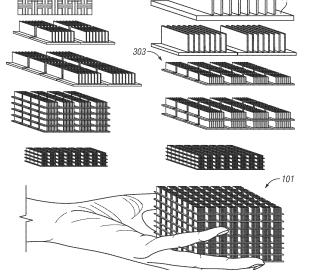
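As a minimal sketch of that define, collect, learn workflow, the snippet below trains a nearest-centroid classifier on a tiny made-up training set. The data, the labels, and the function names `train_nearest_centroid` and `predict` are illustrative assumptions; this is not the Knowm API and not one of the methods discussed in this series.

```python
from collections import defaultdict

def train_nearest_centroid(training_set):
    """Learn one centroid (mean feature vector) per label from (features, label) pairs."""
    sums = {}
    counts = defaultdict(int)
    for features, label in training_set:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}

def predict(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))

# Hypothetical training set: two features per example, two labels.
training_set = [
    ((1.0, 1.2), "small"),
    ((0.8, 1.0), "small"),
    ((4.0, 4.2), "large"),
    ((4.4, 3.8), "large"),
]

centroids = train_nearest_centroid(training_set)
print(predict(centroids, (1.1, 0.9)))  # small
print(predict(centroids, (4.1, 4.0)))  # large
```

Swapping in a different task only means swapping the training set; the training and prediction code stays the same.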

There is no single method in Machine Learning for learning from training examples; there are many. These range from highly complex, hundred-million-parameter convolutional neural networks (CNNs) down to simple statistical techniques like linear regression. Choosing between techniques depends on many things. Generally, more complex methods perform better than shallow methods because they form deeper, more complex understandings of the problem, and yet they require more energy and time to train. This trade-off makes the choice between methods problem specific. As we shall see, AHaH Computing doesn’t play by the same rules: it benefits from being physically realizable on a chip, and this allows us to build deep models which run in less time and with substantially less energy.

Image by naturalflow

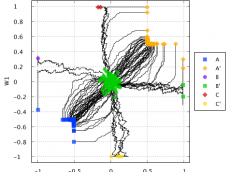

An important aspect of Artificial Intelligence and Machine Learning is our ability to test what we’ve trained. This is why it is important to withhold some examples from the learning phase and later use them to test our model’s ability to generalize. For many of the problems you will try to solve with a Machine Learning tool, you’ll find a standardized set of examples which has already been split into training and testing sets. This gives us a way to see how the program we’ve trained compares to other methods on the same task. In the examples in this series we will use the standardized datasets for each task so you can see how the Knowm API stacks up.
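When a dataset does not come pre-split, withholding examples can be sketched roughly as follows. The helper name `train_test_split`, the 25% test fraction, and the toy examples are assumptions chosen for illustration; standardized datasets ship with their own fixed split, which should be preferred when comparing against published results.

```python
import random

def train_test_split(examples, test_fraction=0.25, seed=0):
    """Shuffle a copy of the examples and withhold a fraction for testing."""
    shuffled = list(examples)
    # A seeded generator makes the split reproducible from run to run.
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical dataset of (feature, label) pairs.
examples = [(x, x % 2) for x in range(20)]
train, test = train_test_split(examples)

print(len(train), len(test))  # 15 5
```

The model is fitted only on `train`; `test` is touched once, at the end, to estimate how well the model generalizes.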

While evaluating your learner you also need to be aware of the metrics you are using to evaluate it. When you build a model for a classification problem (like deciding if a patient’s tumor is malignant or benign), you almost always want to look at the accuracy of that model: the number of correct predictions divided by the total number of predictions made. However, classification accuracy alone is typically not enough information to judge the ability of your learner, and it is important to evaluate all of the primary metrics: Precision, Recall, Accuracy and F1. Precision is the fraction of positive predictions that are correct, Recall is the fraction of actual positives that the model finds, and F1 is the harmonic mean of the two.
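To see why accuracy alone can mislead, here is a small sketch that computes all four primary metrics for the tumor example. The function name `classification_metrics` and the ten patient labels are made up for illustration; the formulas are the standard definitions based on true/false positives and negatives.

```python
def classification_metrics(y_true, y_pred, positive="malignant"):
    """Compute accuracy, precision, recall and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical results for ten patients: two malignant tumors, one of them missed.
y_true = ["malignant", "malignant"] + ["benign"] * 8
y_pred = ["malignant", "benign"] + ["benign"] * 8

metrics = classification_metrics(y_true, y_pred)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

In this made-up case the accuracy is 0.90 even though the model misses half of the malignant tumors, which only the recall of 0.50 reveals.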

It is also important to understand how your technique performs in terms of speed and power efficiency. Researchers commonly consider these secondary metrics less important, but they become important when you intend to move your model into a real-world environment where energy is limited and speed matters. The importance of secondary metrics is discussed further in The Adaptive Power Problem and How to Build the Ex-Machina Wetware Brain.
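Speed, at least, is easy to measure in software. The sketch below times a stand-in prediction function with Python's `time.perf_counter`; the names `mean_latency_seconds` and `toy_predict` are hypothetical, and measuring energy use would need hardware-level instrumentation that is not shown here.

```python
import time

def mean_latency_seconds(predict_fn, inputs, repeats=5):
    """Average wall-clock time per prediction over several passes through the inputs."""
    if not inputs:
        raise ValueError("inputs must not be empty")
    start = time.perf_counter()
    for _ in range(repeats):
        for x in inputs:
            predict_fn(x)
    elapsed = time.perf_counter() - start
    return elapsed / (repeats * len(inputs))

def toy_predict(x):
    """Stand-in for a trained model: thresholds the sum of the features."""
    return 1 if sum(x) > 1.0 else 0

inputs = [(0.1 * i, 0.2 * i) for i in range(1000)]
latency = mean_latency_seconds(toy_predict, inputs)
print(f"{latency * 1e6:.2f} microseconds per prediction")
```

The number printed depends on the machine, so it is only meaningful when the methods being compared are timed on the same hardware.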

At Knowm Inc. we are focusing on these secondary metrics (while meeting or beating primary metrics) because they demonstrate the unique advantage our chips have over other Machine Learning tools. We believe this gives our future neuromemristive processors real-world application and a market advantage over traditional von Neumann architectures. Furthermore, once these speeds are realized on physical kT-RAM, there will be little to restrict developers from building arbitrarily deep (and thus more powerful) methods.
