I recently made the pilgrimage to our local movie theater to view the latest ‘rise of the machines’ artificial intelligence flick. I always make a point of watching these movies (AI, Terminator, iRobot, Transcendence, etc.), as I am interested in the divide between fantasy and reality when it comes to building intelligent machines. You see, for more than a decade I have been in the business of solving the very hard problem of building learning processors that physically adapt. It is not hardware and it is not software. It's soft-hardware or, as it was called in Ex Machina, ‘wetware’. (The technical term is currently ‘neuromemristive’.) My journey has taken me to the edge of questions such as “what is life?”, and what I discovered fundamentally changed my perception of how the self-organized world works.

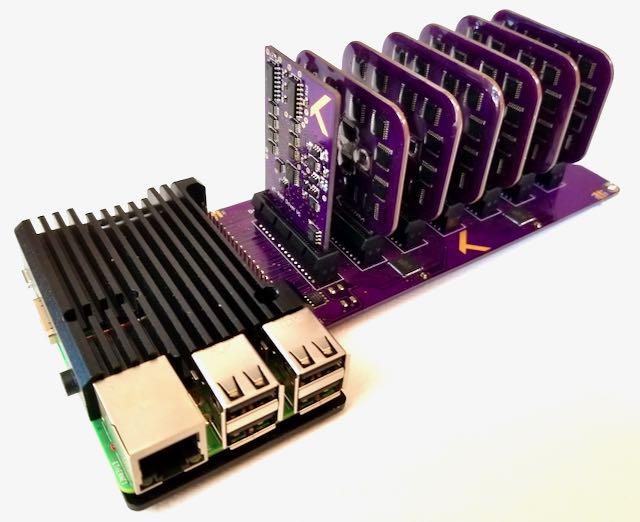

I have had the great opportunity to go from helping launch and advise large government-sponsored programs, to running my own programs, to co-founding Knowm Inc., which is now on the cusp of commercializing a physical learning processor based on memristors that are already available in our web store. I am not the prototypical mad scientist inventor. I do not work for some large omnipresent global technology corporation. I am not a billionaire playboy. I’m just a guy who realized there was a way to solve a very hard problem, one with major consequences if a solution were found, and I dedicated my life to doing it. Since I began around 2001, I have had quite the journey. I have forged amazing friendships, inadvertently sparked a few skirmishes, and even made a few enemies and frenemies. I have navigated the beltway bandits of DC with my flesh mostly intact and contracted with various branches of the DoD, including DARPA, the Air Force and the Navy. I have been contacted by spooks, both foreign (probably) and domestic (for sure). I am acutely aware of the problem, who is working on it, and, perhaps more importantly, how they think about it. I am aware of where reality gives way to fantasy. This is why I love AI flicks, and why I found Ex Machina so compelling. You see, I know how the neural processor they show in that movie could actually work.

Any good science fiction movie has at least one technology that must be accepted for the movie’s premise to make sense. In space travel, this is the Faster Than Light (FTL) or Warp Drive. Without such a technology, the plot is not going to go anywhere; the whole movie would be stuck inside a metal tube adrift in empty space for thousands or millions of years. The FTL Drive makes it all possible. AI movies, similarly, always have a special ‘learning processor’ from which the plot evolves. There is a good physical reason for this, which I formally call the adaptive power problem. Without such a technology, AI is doomed to power efficiencies millions of times worse than biology. Ironically, those closest to trying to solve the problem of AI often forget this fact, lost in a sea of mathematics and wholly oblivious to the real physical constraints of their math. Hollywood, oddly enough, consistently gets it right, at least in principle. If we want something like a biological brain, or better, it is not going to work like a modern computer. It is going to be something truly different, all the way down to its chemistry and how it computes information. Nothing short of a change in our understanding of what hardware can be will get us to the efficiency of the brain, the original wetware.

In iRobot it was the positronic brain. In Terminator it was the Neural Net CPU. In Transcendence it was a quantum processor. Most interesting to me, in Ex Machina they called it “wetware” and it was built of a “gel” to “allow the necessary neural connections to form”. It was, I thought, a beautiful structure:

How would such a processor, as shown above, actually work? I was asking myself exactly this question over a decade ago. The answer I found changed the course of my life.

The basic problem I came up against was the efficient emulation of a biological synapse. Synapses have two properties that we need. First, a collection of synapses needs to perform an integration over their values, or weights. Second, the synapses must change or adapt as they are used so they can learn. These two properties are called integration and adaptation. Integration is not all that difficult: electrical currents sum easily if a synapse is represented as a resistor or a pair of resistors. The real problem is adaptation. One ‘solution’ is to ignore the problem and build a non-adaptive chip. While this may sound crazy, it is exactly what many in the neuromorphic community do, simply because our current tools are so limited. Learning, or more generally adaptation or plasticity, enables a program to adapt based on experience and attain better solutions over time. An AI processor that cannot learn cannot be intelligent. The problem, then, is finding a way to make continuous adaptation an efficient operation and to make it available as a resource to our computing systems. What will happen when modern computing gains access to trivially cheap and efficient perceptual processing and learning?
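The integration half of the problem can be made concrete with a minimal Python sketch. It assumes each synapse is a differential pair of conductances. The names `integrate`, `g_a` and `g_b` are hypothetical, chosen for illustration, and the values are made up. Input voltages drive both conductances, and the two output lines are held at virtual ground. Kirchhoff's current law then performs the weighted sum. Adaptation, the hard part, is deliberately not modeled here.

```python
"""Toy model of synaptic integration with resistive connections.

An illustrative sketch, not Knowm's circuit. Each synapse is assumed to be a
differential pair of conductances (G_a, G_b), so its effective weight
w = G_a - G_b can be positive or negative. With both output lines held at
virtual ground, Kirchhoff's current law gives
    I_out = sum_i V_i * (G_a_i - G_b_i),
i.e. the weighted sum is computed by physics rather than by arithmetic.
"""

from typing import Sequence


def integrate(voltages: Sequence[float],
              g_a: Sequence[float],
              g_b: Sequence[float]) -> float:
    """Return the differential output current in amperes.

    voltages: input voltages in volts, one per synapse
    g_a, g_b: the two conductances of each synapse pair, in siemens
    """
    if not (len(voltages) == len(g_a) == len(g_b)):
        raise ValueError("all inputs must have the same length")
    # Currents flowing into the 'a' line and the 'b' line each sum (KCL) ...
    i_a = sum(v * g for v, g in zip(voltages, g_a))
    i_b = sum(v * g for v, g in zip(voltages, g_b))
    # ... and their difference is the signed weighted sum.
    return i_a - i_b


if __name__ == "__main__":
    v = [0.1, 0.0, 0.1]          # hypothetical input pattern (volts)
    ga = [2e-6, 1e-6, 1e-6]      # hypothetical conductances (siemens)
    gb = [1e-6, 3e-6, 0.5e-6]
    print(integrate(v, ga, gb))
```

A pair of conductances is used instead of a single resistor because a physical conductance can never be negative. Taking the difference of the pair is one common way to get signed weights.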

Particles in Suspension

I thought about the problem of building adaptive electronic synapses for about a year until I had a big epiphany. I was a Physics major at the time, taking electricity and magnetism (E&M), which meant my brain had recently been exposed to something relevant to my problem. E&M is all about understanding electric and magnetic fields, which is to say a study of Maxwell’s equations. You learn how materials, for example a conductive particle, behave when exposed to electric fields. The electric field pulls at the charges in the material and, if they are free to move, causes the charges to separate and form a dipole:

Image by PSC1121-GO

The dipole, in turn, feels a force from the electric field. If the electric field is homogeneous, the particles act like little magnets and align their principal axes with the field. If the field is inhomogeneous, each particle will both align and feel a net force. My “aha!” moment occurred when I realized that this effect could be used to build electrically variable resistive connections.

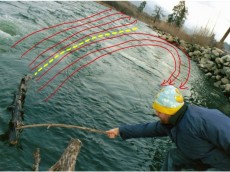

If you placed a bunch of conducting particles into a non-conductive liquid or gel and then exposed them to an electric field between two terminals, they should spontaneously organize themselves to bridge the divide. I hit the college research library to see if I could find anybody doing it. I discovered a whole scientific field, called dielectrophoresis (DEP), and study after study showing that what I was thinking was clearly possible. Everybody was looking at the DEP force as a mechanism to manipulate small particles, but nobody was looking at ways to build functional electronic synapses. Only a few years later, papers like this one started to be published:

Gold nanoparticles self-organizing a wire that bridges two electrodes. Kretschmer, Robert, and Wolfgang Fritzsche. “Pearl chain formation of nanoparticles in microelectrode gaps by dielectrophoresis.” Langmuir 20.26 (2004): 11797-11801.
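The dielectrophoretic force has a standard closed form, obtained in the dipole approximation. It applies to a spherical particle that is small compared with the distance over which the field changes. The time-averaged force is F = 2π ε_m r³ Re[K(ω)] ∇|E_rms|², where ε_m is the medium's permittivity and r the particle radius. K is the Clausius-Mossotti factor, K = (ε_p* − ε_m*)/(ε_p* + 2ε_m*), with complex permittivities ε* = ε − jσ/ω. The Python sketch below simply evaluates these expressions. The material values, drive frequency and field gradient are hypothetical placeholders, not measurements from any real setup. The force vanishes when ∇|E|² is zero. That is the homogeneous-field case described above: the particle aligns but is not pulled.

```python
import math

EPS0 = 8.8541878128e-12  # vacuum permittivity, F/m


def complex_permittivity(eps_r: float, sigma: float, omega: float) -> complex:
    """Complex permittivity eps* = eps_r*EPS0 - j*sigma/omega (omega in rad/s, > 0)."""
    return eps_r * EPS0 - 1j * sigma / omega


def clausius_mossotti(eps_p: complex, eps_m: complex) -> complex:
    """Clausius-Mossotti factor K for a sphere (particle eps_p in medium eps_m)."""
    return (eps_p - eps_m) / (eps_p + 2 * eps_m)


def dep_force(radius: float, eps_m_r: float, k_real: float, grad_e2: float) -> float:
    """Time-averaged DEP force in newtons along one axis (dipole approximation).

    F = 2*pi*eps_m*r^3*Re[K]*d(|E_rms|^2)/dx, with grad_e2 in V^2/m^3.
    Positive Re[K]: pulled toward high field; negative: pushed away.
    """
    return 2 * math.pi * eps_m_r * EPS0 * radius ** 3 * k_real * grad_e2


if __name__ == "__main__":
    omega = 2 * math.pi * 1e3                         # hypothetical 1 kHz drive
    particle = complex_permittivity(1.0, 1e7, omega)  # metal-like particle (assumed values)
    medium = complex_permittivity(4.7, 1e-10, omega)  # oil-like insulating medium (assumed values)
    k = clausius_mossotti(particle, medium)
    print(f"Re[K] = {k.real:.6f}")                    # close to 1 for a conductor in an insulator
    print(f"F = {dep_force(50e-9, 4.7, k.real, 1e16):.3e} N")  # hypothetical field gradient
```

For a conducting particle in an insulating liquid, Re[K] is essentially 1. Such a particle is therefore drawn toward the regions of strongest field, such as the tip of a growing chain. This is consistent with the pearl-chain bridging in the figure above.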

Let me dwell on this idea a bit, because it is important. The more you look at the natural world, the more you realize that there is something going on. It is something people rarely talk about, but it nonetheless goes to the heart of how everything works. Nature is organizing itself to dissipate energy. For example, the human economy is a massive self-organizing energy-dissipating machine, sucking fossil fuels out of the ground and channeling the energy into the construction of all sorts of gizmos and gadgets. The structure that we see around us, both human-made and not, is there because free energy is being dissipated and work is being done. Rather than everything decaying away according to the Second Law of Thermodynamics, we see self-organized structure everywhere, and this structure is the result of energy dissipation pathways. Or, to be more direct, it is energy dissipation pathways. It is incredible if you think about it. Nature will self-organize a wire from independent particles if a voltage (a potential energy difference) is present, and that fact gets to the heart of what self-organization actually is: matter configuring itself to dissipate free energy.

Once you understand what is going on, you can start to understand how to harness it. Think about it. If a bunch of particles will spontaneously organize themselves out of a colloidal suspension to dissipate energy then what happens when we control the energy? What happens if we make the maximal-energy-dissipation solution the solution to our problem? Will the particles self-organize to solve our problem? The answer, it turns out, is “yes”. In fact, one of the bigger shocks in my life was when I discovered that the way nature builds connections in response to voltage gradients matches seminal results in the field of machine learning on how to best make decisions or classifications. Thinking about this still gives me goosebumps because I appreciate what it means: there is a relatively clear path between physically self-organizing electronics and advanced machine learning processors. In other words, Ex Machina’s wetware brain is possible, and we have a pretty good idea how it all works.

During my time advising the DARPA Physical Intelligence program, I met a man named Alfred Hubler for the first time. Meeting him is like traveling back in time to the era of Tesla, when amazing contraptions were giving us a peek into a new and largely unexplored world. Hubler was an experimentalist, and one of his experiments was to place metal ball bearings in a Petri dish with castor oil and apply 20,000 volts. The large voltage is needed because the particles and distances are so large. The first time I saw this was in a live demonstration for government officials and advisors on a performer site-visit review in Malibu. When you see how the particles behave, you start to question your ideas about what life actually is.
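A quick back-of-the-envelope calculation shows why shrinking the geometry lets the voltage drop. The sketch below treats the field as simply E = V/d. It also assumes a 10 cm electrode spacing in the dish; that figure is hypothetical, since the actual spacing is not stated. Under that assumption, 20,000 V gives 2×10⁵ V/m, and the same average field across a 100 nm gap needs only about 20 mV. This compares field strength only. Real particle behavior also depends on particle size, the liquid's viscosity and the field gradient.

```python
def field_strength(volts: float, gap_m: float) -> float:
    """Average field magnitude E = V / d (uniform-gap approximation), in V/m."""
    return volts / gap_m


def voltage_for_field(field_v_per_m: float, gap_m: float) -> float:
    """Voltage needed to produce the same average field across a different gap."""
    return field_v_per_m * gap_m


if __name__ == "__main__":
    DISH_GAP_M = 0.10                             # hypothetical electrode spacing (assumption)
    e_dish = field_strength(20_000, DISH_GAP_M)   # 20,000 V from the demonstration
    print(f"dish field: {e_dish:.3g} V/m")
    for gap in (1e-3, 1e-6, 100e-9):              # 1 mm, 1 um, 100 nm
        print(f"{gap:.0e} m gap -> {voltage_for_field(e_dish, gap):.3g} V")
```

The scaling is linear. Each factor of ten reduction in the gap cuts the voltage needed for the same field by ten.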

It is not just that wires grow, although that is amazing to me. It’s how they grow, like little squirming tree-worms, branching and wiggling as they try to bridge the voltage gradient. Nothing quite prepares you to see totally inanimate matter acting as if it has purpose, as if it were alive. I knew that such things were theoretically possible at the time (I had already mostly developed AHaH Computing), but it still shook me to the core to actually see it in real life. I was watching a machine built of metal parts and oil act as if it were alive and intelligent. It was a sort of physical intelligence, which is exactly what the DARPA program it was being demonstrated under was all about.

So how do we get from Hubler’s Petri dish to the gel-based network in Ex Machina? While I can’t show you how to make it look exactly like a translucent blue crystal ball, I can show you how to build it with the technology we have now. I can explain how this new form of ‘wetware’ works in principle because that’s what the theory of Anti-Hebbian and Hebbian computing is all about. It is about finding a way to use Nature as Nature itself does. To not just compute a brain but to actually build one. To find a way for matter to organize itself on a chip to solve computational problems.

The driving physical engine of a learning processor is structural reconfiguration. Adaptation is not computed. Rather, physical circuits actually move and adapt in response to potential energy gradients. This is what makes the learning processor powerful. By reducing adaptation to a physical process, we no longer have to compute it; we get it for free as a by-product of physics. Memory and processing merge, voltages and clock rates drop, and power efficiencies explode. AHaH Computing is about understanding how to build circuits that adapt or learn to solve your problems and, as a result, dissipate more energy ‘as a reward’. The path to maximal energy dissipation is the path that solves your problem, and the result is that Nature self-organizes to solve your problem.
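"Adaptation as a by-product of physics" can be illustrated at the device level with the textbook linear ion-drift memristor model (after Strukov et al., Nature 2008). In this model, the same current that "reads" the device also moves ions and changes its resistance. The Python sketch below is a generic illustrative model. It is not a model of Knowm's devices or of kT-RAM, and the parameter values and the class name `LinearDriftMemristor` are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class LinearDriftMemristor:
    """Linear ion-drift memristor (after Strukov et al., 2008).

    Illustrative only; parameter values are assumptions, and this is not a
    model of any Knowm device.
    """
    r_on: float = 100.0       # ohms, resistance when fully doped
    r_off: float = 16_000.0   # ohms, resistance when undoped
    d: float = 10e-9          # device thickness, m
    mu_v: float = 1e-14       # ion mobility, m^2 / (V s)
    w: float = 5e-9           # state: width of the doped region, m

    def resistance(self) -> float:
        x = self.w / self.d
        return self.r_on * x + self.r_off * (1.0 - x)

    def apply(self, volts: float, dt: float) -> float:
        """Apply a voltage for dt seconds (one forward-Euler step); return current in A."""
        i = volts / self.resistance()
        # Ions drift in proportion to the current: positive current widens the
        # doped region (lower resistance), negative current shrinks it.
        self.w += self.mu_v * self.r_on / self.d * i * dt
        # The state is physically bounded by the device thickness.
        self.w = min(max(self.w, 0.0), self.d)
        return i


if __name__ == "__main__":
    m = LinearDriftMemristor()
    print(f"start: {m.resistance():.0f} ohm")
    for _ in range(5):
        m.apply(+1.0, 10e-3)   # positive pulses: resistance falls
    print(f"after + pulses: {m.resistance():.0f} ohm")
    for _ in range(5):
        m.apply(-1.0, 10e-3)   # negative pulses: resistance rises again
    print(f"after - pulses: {m.resistance():.0f} ohm")
```

The model shows a bi-directional, incremental response. No separate weight-update computation appears anywhere in the loop, because the state change is simply what the device does when current flows.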

What we have managed to understand so far is simple because it has to be simple. We can’t jump straight from where we are now to a fully 3D self-organizing iridescent orb. We must plug into the existing technological backbone and add only those pieces that are missing. That’s what we are doing with kT-RAM and the KnowmAPI. Rather than particles in a liquid or gel suspension, we have ions in a multi-layered glass-like structure above traditional CMOS electronics. Just like in Hubler’s Petri dish, conductive pathways are evolved within the structures; they are just much, much smaller.

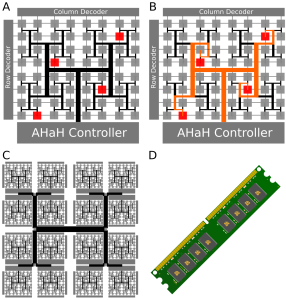

kT-RAM is a general-purpose learning co-processor with no topological restrictions. Alternate structures like crossbars can be more dense but suffer from significant topological and signal-driving constraints. There is no right answer–many architectures are possible and will be developed for specific uses.

All the technologies needed to create a learning processor have arrived. It is going to happen; it is only a matter of time. While I’ve been focused on the learning problem, other groups have focused on the routing issues. Some have converged on core grid structures, while I favor hierarchical and self-similar routing architectures. In the end it does not really matter. What matters is that the technological building blocks we need are here and ready to be put together. It is more a problem of human organization, money and politics than a technological one. That may sound crazy, but just consider some facts. Vision systems have matched and slightly surpassed humans in some domains, like facial recognition (Facebook). Natural language processors have beaten world experts at Jeopardy (IBM Watson). Cars are driving themselves. When you combine these algorithmic successes with the availability of neuromorphic and neuromemristive hardware, you get a technological revolution unlike anything we have yet seen. The stakes are huge, and the first participants to achieve general-purpose machine intelligence backed by wetware will likely dominate computing and perhaps a great deal more.

The computers we have today are amazing, but they are nothing like the self-organizing world of living systems and brains. Computers today are a tiny part of what is possible. Due to an amazing convergence of technological capabilities, we suddenly find ourselves looking out into a new world and taking our first steps. The rewards will be staggering, and the consequences of being left out terrifying. Machine intelligence is possibly the last technology humanity will invent. After this critical moment, technology can invent itself.

24 Comments

endthedisease

So how far off are we from something like this?

Alex Nugent

There are no longer any technological barriers in terms of device fabrication or integration, and the intellectual barriers are falling very rapidly. AHaH Computing has made a mapping from basic memristor circuits to foundational machine learning operations. The machine learning field has rapidly attained human-level performance in a number of problem domains, and very different approaches are pointing toward similar solutions, a scientific phenomenon called consilience. No one group has the full solution (yet), but the parts all exist and are rapidly coming together.

Matthew Schiavo

That didn’t really answer endthedisease’s question; can you estimate how far off, in years or decades, we are from producing a practical, commercially-viable application of this technology? I’m not talking about a fully-realized “wetware” brain (a la Ex Machina), but a proper, albeit primitive, neuromemristive computer that can demonstrate adaptive machine learning in real-time?

Alex Nugent

The major points of uncertainty are people, politics and money, not technology. I cannot estimate those, so I can’t give you an answer with any confidence. If you would like to evaluate our memristor technology, order some memristors and test them. If you want to better understand the theory, check out our FAQ and links.

Myles

I’ve gone down the rabbit hole of about a dozen of your articles by now and I have to say, this is by far one of the most inspirational and thought-provoking pieces on AI to date. In this field, most people aren’t saying anything that hasn’t already been said regarding neural networks and machine learning, so this was quite refreshing. As an aspiring computer scientist in a burgeoning field, I truly hope to see you guys at the forefront of artificial intelligence.

steven

what do you mean that this technology will be our last invention…is it capable of creativity?

Alex Nugent

First, please be mindful of how your question has changed what was said:

That said, yes, I believe (strongly) that machine intelligence will be (and in some examples already is) capable of creativity. A general intelligence (such as a human brain), with power and space efficiencies comparable to biology, will possess the ability to have creative thoughts and act in the world to attain objectives. This has led some to warn of a potential existential threat to humanity (see Bostrom’s book, for example). We feel these warnings are warranted but stress that such technology does not currently exist. However, it is being developed at a rapid pace that many do not appreciate. The time to raise alarms and ask questions is before efficient AGI is built, not after.

steven

Thanks…is it possible to make a learning and thinking robot servant with this tech?

Alex Nugent

The only problem with your question is the “thinking” part. So far as I know, there is no generally-agreed definition of what “thinking” actually is. If I could change your question to:

“is it possible to make a learning robot servant with this tech?”

Then my answer is “yes”.

alex

Why wouldn’t the concern be to find/create a substrate (like the structured gel from Ex Machina) that has a similar plasticity to the brain when an electric current is run through it, or is it not this simple?

Alex Nugent

Alex, the concern is definitely to find/create a substrate that has plasticity like the brain, but as you guessed, it’s not that simple. Brain plasticity is immensely complex and not well understood. See this post for example. Rather than mimicry, we are focused on achieving primary performance parity with state-of-the-art machine learning algorithms, while reducing synaptic integration and adaptation to analog operations via AHaH Computing. In time, electronics will exploit methods of self-assembly from liquid or gel suspension to grow and adapt connections as well as to assemble modules in 3D. However, before that time we have to bootstrap from where we are and tie into the current technological backbone. In that respect, we have already identified general plasticity mechanisms capable of supporting machine learning functions (Knowm & AHaH Plasticity), how to map this plasticity to circuits (AHaH Computing), and how to build devices that support a bi-directional, voltage-dependent incremental response (Knowm Memristor, and here for some context).

alex

What will the transition from AHaH Computing to self-assembly from liquid or gel suspension look like? Will it be a linear development, or are other shifts in technology needed to achieve this?

Alex Nugent

I would phrase the question like this: “What will the transition from BEOL CMOS memristors to 3D self-assembly from liquid or gel suspension look like?” AHaH Computing is not separate from ‘self-assembly from a liquid’: it’s a theory for understanding how to compute from AHaH Nodes, and these can be built top-down or grown bottom-up. The transition (if it occurs) could take a number of forms, but one possibility is that it will start with 3D chip stacking, where wires are assembled via a liquid between the layers of stacked silicon chips. Another route would be the self-assembly of ‘circuit fragments’. It’s also possible that top-down methods of manufacture with memristors, CMOS and silicon photonics could outperform a self-assembly approach at some scales.

alex

Any recommended reading on the subject or current research that is exploring this avenue of technology?

Alex Nugent

This stuff spans a lot of disciplines. See here for a curated list of public resources from us, and the Knowm Library for some good books. If you are into liquid/particle assembly, Nanoelectromechanics in Engineering and Biology may be a good place to start.

alex

So there are few applications as of now when it comes to gel-based neuromemristive/neuromorphic systems?

Alex Nugent

I would put it differently. I would say there is an international arms race to achieve adaptive learning autonomous controllers. The first groups to do this and beat digital systems, be it with gel-based or something else, will be rewarded with a world filled with applications.

alex

I am guessing you’ve heard of arbortrons. Why do you think there is so little interest in them when it comes to intelligent systems?

Alex Nugent

I believe I first heard of “Arbortrons” from Alfred Hubler in the Physical Intelligence (PI) program, although I had forgotten the name until you mentioned it. I helped create and advise the PI program, and I believed then, and still do, that Alfred Hubler was the only person in that whole program who was unambiguously demonstrating physical intelligence. When his work contract was canceled, I was angry and extremely disappointed with the project management.

Arbortrons, as implemented with ball bearings in castor oil or in numerical simulations, are impractical for real-world problem solving. The nano-scale is another matter entirely, and in some ways modern memristors could actually be seen as a very small version of what Dr. Hubler demonstrated. The reason they are not popular, IMO, is that (1) people do not know about them and (2) they have not yet been demonstrated to solve problems at levels competitive with modern methods of machine learning. I believe that will change. I also believe that they give us insight into the fundamental nature of physical intelligence: the self-configuration of matter to dissipate energy. If anybody deserves a lot more research funding, it’s Dr. Hubler.

alex

And I think, correct me if I am wrong, that there is very little research into the nano-scale systems.

Alex Nugent

Depends on what you mean by Arbortrons. There is the whole field of dielectrophoresis and electrophoresis, but it’s mostly been used as a mechanism for nano-scale manipulation. For example, this book.

Oliver

Excellent article… Stumbled across this as I also watched Ex-Machina and something struck me about how the wetware ‘brain’ construction could be, by far, the most realistic approach to creating an artificial neural network. I also ended up watching the whole of Alfred’s lecture (what an eccentric chap!). As someone who works with servers and IT networks, I always think that we as humans have a tendency to over-complicate designs and network architectures. Nature has an inherent ability (as Alfred shows) to choose ‘the path of least resistance and efficiency’, something that we humans struggle to do when we actually THINK about doing it. I watch Alfred talk about how 1000 nanoparticles could fit into 1 human neuron and it’s obvious that we as humans are only ever going to be able to create the very basic fundamentals of what will eventually be a super intelligent AI. So that said, do you think that we need to not THINK too much about how we create the foundation of an atomic neural network in terms of its operation (seeing as we don’t even understand how our own brain works) and instead just create ‘something’ based on how nature operates, i.e. throw a trillion nanoparticles into some gel, somehow hook it up to the internet and let it figure out how to configure itself?

Alex Nugent

Oliver, yes and no. We do have to think, a lot actually, but not in the way people typically think: not about how to solve a specific problem, but about how to incentivize nature to solve it for us using physics. The overarching principle is that nature is self-organizing itself to dissipate free energy. That’s ‘the objective function of nature’, and honestly it does not really care that it’s doing anything useful for us in the process. So if we just threw a bunch of particles into a gel and hooked up a few electrodes to the internet, it might look cool (if we are lucky), but it may not (and probably will not) do anything useful for us. The trick, which is still an open problem, is to get in the way of this process without overly constraining nature’s degrees of freedom. That is, we have to make it such that the only way for those particles to access free energy dissipation is to solve our problem. The way we do this with current technology makes it impossible for energy to be dissipated in any manner other than what we intend, i.e. “the program”, which completely constrains the self-organizing aspect of nature to the point where it can no longer operate for us.

You may want to read about Knowm and thermodynamic computing.

If we understood natural self-organization better, we could add the minimal constraints needed to make it possible to throw a colloidal suspension of nanoparticles into a gel matrix, attach electrodes, hook it up to a body and watch as it (literally) came alive. That raises the question: what is life? How is the way those particles assemble themselves any different from life as we know it?