The Knowm team attended this year’s NICE workshop at IBM Almaden, sponsored by Semiconductor Research Corporation, IBM and the Kavli Foundation. The conference featured a good sampling of the modern celebrities of neuromorphics and was representative of the entrenched government, corporate and academic interests in the space, who are clearly starting to feel the pressure from the machine learning space.

Knowm Team NICE 2017

The conference started with introductions from conference chair Murat Okandan and IBM-Almaden director Spike Narayan. Murat recalled H. G. Wells’s World Brain and asked the question: What are we going to use these tools for? Mr. Narayan was more pragmatic, discussing a direction toward computing at the edge, helping humans do their jobs better, and trying to draw a distinction between “Machine Learning” and “Machine Intelligence”. We found the ML/MI distinction arbitrary and indicative of IBM technology name-branding (à la “cognitive computing”).

NICE Highlights:

- True to DARPA’s continual drum-beat after the launch of the SyNAPSE program, another program (Lifelong Learning) is coming that aims to resolve the catastrophic forgetting problem found in statistical learning methods such as neural networks. Program manager Hava Siegelmann gave an overview of her vision for the program, which would involve three areas: (1) software architectures, algorithms, theories, and supervised and unsupervised reward-based learning; (2) physical principles, including learning from nature and transfer to machine learning; and (3) evaluation (by a government team).

- Karlheinz Meier presented for the Human Brain Project, representing both the funding agency and himself as a performer (entrenched much?).

- Kwabena Boahen gave an engaging presentation with great animations and revealed the neuromorphs’ five-point ‘secret’ master plan:

  - Implement dendritic computation with sub-threshold analog circuits to degrade gracefully with noise.

  - Implement axonal communication with asynchronous digital circuits to be robust to transistors that shut off intermittently.

  - Scale pool-to-pool spike communication linearly with the number of neurons per pool, rather than quadratically.

  - Distribute computation across a pool of silicon neurons to be robust to transistors that shut off intermittently or even permanently.

  - Encode continuous signals in spike trains with precision that scales linearly with the number of neurons.

- Sankar Basu gave an overview of NSF’s efforts in the Nanotechnology Initiative, BRAIN Initiative, and National Strategic Computing Initiative (NSCI). Of interest was a map showing the selected groups for the SRC co-sponsored E2CDA program, which was completely devoid of small businesses, America’s technology engine. This is due to SRC’s IP acquisition strategy, which requires all program-generated IP, including all background IP, to be made available to SRC-member companies in perpetuity. This is a good example of a government-corporate partnership that only benefits the big corporations (in this case SRC member companies).

- Robin Degraeve, a researcher at IMEC, gave an overview of an interesting RRAM-based processor for unsupervised temporal predictions that attempts to work around the stochastic properties of oxygen-vacancy RRAM.

- Sandia National Labs was heavily represented, with six speakers talking about “Spike Temporal Processing Units”, neuromemristive hardware, hippocampus-inspired adaptive algorithms and game theory.

- Joint Stanford and Sandia National Labs work aims to achieve low-voltage artificial synapses with a three-terminal technology they call ENODE, or more verbosely poly(ethylenedioxythiophene):poly(styrene sulfonate) modified by polyethyleneimine vapor. The selling point here is that the energy barriers for state retention and state modification are decoupled, achieving low switching energies while maintaining non-volatility. This three-terminal technology has its roots in the ‘memistor’ work by Bernard Widrow in the 1960s.

- Jeff Hawkins gave an energetic talk about his new idea that all cortical elements are encoding allocentric information, while avoiding a detailed description as to how this allocentric information comes to be. Hawkins claims:

  - All sensory modalities learn 3D models of the world.

  - All knowledge is stored relative to external (allocentric) reference frames.

  - It is not possible to build intelligent machines without these properties.

Jeff Hawkins NICE 2017

- A very tired Ruchir Puri gave an overview of the IBM Watson system covering four levels: Cloud and infrastructure services, content, cognitive compute, conversation applications.

- Narayan Srinivasa (former HRL program manager for the DARPA SyNAPSE, PI and UPSIDE programs) discussed the relationship between energy and information from a neuroscience perspective. Of interest was his review of the work of Levy & Baxter and his discussion of energy-efficient spike codes. The idea is that the optimal spike code changes depending on the ratio of active to fixed energy costs.

- Wolfgang Maass gave an inspired talk about synaptic genesis and how common models of neural network learning are incomplete or just plain wrong.

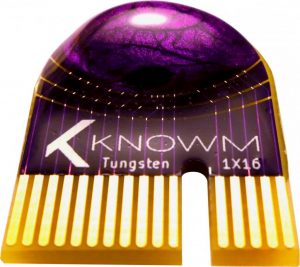

- Tarek Taha and Miao Hu from HP Labs each presented their work in neuromemristive processors and devices, showing great progress on device development and memristor pair synapses.

Miao Hu NICE 2017
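The energy-efficient spike code idea from Srinivasa's talk can be illustrated with a toy calculation. The sketch below is our own simplification, not the model from the talk or from Levy & Baxter's paper: it assumes a single binary neuron that fires with probability `p` per time bin, a fixed energy cost of 1 per bin, and an extra active cost of `cost_ratio` per spike. The function names and the grid search are illustrative assumptions.

```python
import math


def binary_entropy(p):
    """Information (in bits) carried by one time bin that spikes with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def bits_per_energy(p, cost_ratio):
    """Bits per unit energy, assuming fixed cost 1 per bin plus cost_ratio per spike."""
    energy = 1.0 + cost_ratio * p
    return binary_entropy(p) / energy


def optimal_firing_probability(cost_ratio, steps=10000):
    """Grid-search the firing probability that maximizes bits per unit energy."""
    candidates = [i / steps for i in range(1, steps)]
    return max(candidates, key=lambda p: bits_per_energy(p, cost_ratio))


if __name__ == "__main__":
    # Hypothetical ratios of active (per-spike) to fixed energy cost.
    for ratio in (0, 10, 100):
        p = optimal_firing_probability(ratio)
        print(f"active/fixed cost ratio {ratio:>3}: best firing probability ~ {p:.3f}")
```

Under these assumptions, free spikes (ratio 0) favor the maximum-entropy code with a firing probability of 0.5, and the best firing probability drops as spikes become more expensive relative to the fixed cost, which is the sense in which the optimal code depends on the cost ratio.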

While Knowm did not present at NICE this year, Alex was invited to speak at Mentor Graphics the very next day. In the following video, Alex discusses various aspects of AHaH Computing including motivation & history, basic building blocks and mechanics, stem-cell logic and pattern recognition and the emerging open-source software tools for VLSI chip design and simulation. Alex ends the talk showing incremental conductance changes with Knowm memristors and the new Knowm Memristor Discovery tool.
