The sixth annual Neuro Inspired Computational Elements (NICE) workshop was hosted by Intel at its Jones Farm campus in Hillsboro, Oregon, from February 27 to March 1. It featured talks on the arrival of Intel’s new neuromorphic chip, “Loihi”, as well as upgrades to the Human Brain Project-sponsored BrainScaleS-2 and SpiNNaker systems, and a slew of talks from regulars and a few newcomers.

Day 1

Mike Mayberry

(CTO/Managing Director of Intel Labs)

Focus

-components research

-keep Moore’s Law going (by changing the definition)

-novel integration

Multiple possible futures

-better devices (phone/pc/etc), natural interfaces

-electronics disappear into infrastructure

-cloud computing

Intel Labs research agenda

-Communication

-Compute

-Systems

-Moore’s Law

-Security

-Sense Making

-Novel Integration

IoT connectivity

-accurate positioning & mapping

-highly reliable connectivity link

-environmentally context aware

-distributed intelligence

Autonomous Driving

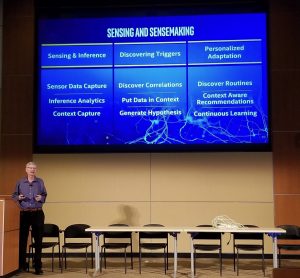

Sensing and Sense making

Adaptive Learning Research

-Personal adaptation: the ability to adapt is really needed

“We want to get to natural intelligence, something that can handle ambiguity”

Reconfigurable Compute: “unlocking the power of heterogeneous computing”

Neuromorphic Systems: “100X improvement in power compared to traditional methods”

Quantum: “a placeholder”

Brain Decoding: “our most exotic project”

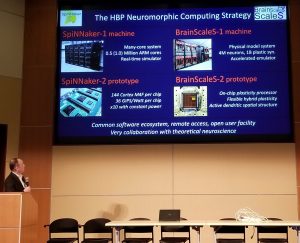

Karlheinz Meier

(presenting for Human Brain Project)

Review of various platforms

-SpiNNaker-1 machine

-SpiNNaker-2 prototype

-BrainScaleS-1 machine

-BrainScaleS-2 prototype

Hava Siegelmann (Presenting as a Sponsor)

Lifelong Learning Machines: Resolve the Stability-Plasticity Dilemma

Machine Learning is more like Machine Training right now

-Continual Learning–systems capable of learning during execution

-Adaptation to new tasks and circumstances–applying previously learned skills to novel situations without forgetting previously learned tasks

-Goal-driven perception–understanding input signals from the mission’s point of view

-Selective plasticity–balance stability and plasticity

-Safety and monitoring

Kaushik Roy

Center for Brain-Inspired Computing: an SRC center with lots of funding from DARPA

-computationally efficient algorithms

-theory of neuro computing from DNN to emerging models

-learning with less data

-incremental and lifelong learning

-algorithms that leverage stochastic and approximate computation

-learning to forget with adaptive decay

Craig Vineyard

Sandia National Labs

Spiking Neuron Implementations of several fundamental machine learning algorithms

Can NICE deliver on the hype?

Review of universal function approximation

Neural Module

-block of P neurons

-neurons have various parameterizations

-inputs are a linear combination of external inputs plus a bias signal

-etc

Spaghetti sort: sorting integers and finding the min and max using temporal coding
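The temporal-coding trick behind spaghetti sort can be sketched in Python. Each value becomes a spike latency, so reading spikes in arrival order yields the sorted list: the first spike is the min and the last is the max. This is a minimal software model that assumes non-negative integer inputs. The event queue stands in for real elapsed time and is not taken from the Sandia implementation.

```python
import heapq


def spaghetti_sort(values):
    """Sort non-negative integers by treating each value as a spike latency.

    Input neuron i fires at time t = values[i]; the event queue releases
    spikes in time order, so the order of arrival is the sorted order.
    """
    events = [(v, i) for i, v in enumerate(values)]  # (spike time, neuron id)
    heapq.heapify(events)
    arrivals = []
    while events:
        _, neuron = heapq.heappop(events)
        arrivals.append(values[neuron])
    return arrivals


def temporal_min_max(values):
    """Min is the first spike to arrive, max is the last."""
    arrivals = spaghetti_sort(values)
    return arrivals[0], arrivals[-1]


print(spaghetti_sort([5, 1, 4, 2, 3]))  # [1, 2, 3, 4, 5]
print(temporal_min_max([7, 3, 9]))      # (3, 9)
```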

Spiking Similarity Search

-kNN

Adaptive Resonance Theory

Particle Image Velocimetry (PIV) Cross-Correlation
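PIV estimates flow by finding the shift that maximizes the cross-correlation between two interrogation windows taken from consecutive frames. Below is a minimal 1D, non-spiking sketch of the quantity being computed. The frames, `max_shift` and the function names are illustrative assumptions, and the talk's spiking formulation is not reproduced here.

```python
def cross_correlation(a, b, max_shift):
    """Return {shift: sum_i a[i] * b[i + shift]} for shifts in [-max_shift, max_shift]."""
    scores = {}
    for s in range(-max_shift, max_shift + 1):
        total = 0.0
        for i, x in enumerate(a):
            j = i + s
            if 0 <= j < len(b):
                total += x * b[j]
        scores[s] = total
    return scores


def estimate_displacement(frame1, frame2, max_shift=3):
    """Displacement estimate = shift with the highest cross-correlation score."""
    scores = cross_correlation(frame1, frame2, max_shift)
    return max(scores, key=scores.get)


frame1 = [0, 0, 1, 3, 1, 0, 0, 0, 0, 0]
frame2 = [0, 0, 0, 0, 1, 3, 1, 0, 0, 0]  # same particle pattern moved right by 2
print(estimate_displacement(frame1, frame2))  # 2
```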

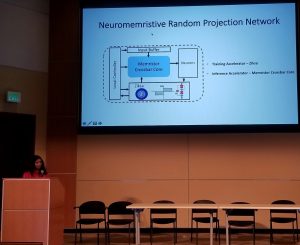

Dhireesha Kudithipudi

RIT

On Device Intelligence with Deep Random Projection Networks

-Mention of Google’s “Smart Messaging System”

-80% of data is unstructured

-A large portion of neuromorphic chips are focused on deep learning

-Shortage of simple, robust and fast learning algorithms for on-device intelligence

-Lack of symbiosis with the device architectures

Random Projection Networks

-Map input into higher dimensional space where inputs are separable

Review Extreme Learning Machine / No-Prop Network

-map input space into a high-dimensional space

-only train last layer of weights

-multi-class regression & classification

-good generalization
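The ELM/No-Prop recipe (fixed random projection, train only the readout) fits in a short pure-Python sketch. The following choices are illustrative assumptions and do not come from the RIT work: tanh hidden units, uniform random weights, 50 hidden units, and a small ridge term with the readout solved in dual form. XOR is used because it is not linearly separable in the input space but becomes separable after the random projection.

```python
import math
import random


def solve(A, b):
    """Solve the linear system A x = b (Gaussian elimination, partial pivoting)."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


class ELM:
    """Extreme Learning Machine: fixed random hidden layer, trained readout only."""

    def __init__(self, n_inputs, n_hidden, ridge=1e-3, seed=0):
        rng = random.Random(seed)
        # Random input weights and biases; these are never trained.
        self.W = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.ridge = ridge
        self.beta = [0.0] * n_hidden  # output weights: the only trained parameters

    def hidden(self, x):
        # Project the input into a higher-dimensional space through a nonlinearity.
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + bj)
                for row, bj in zip(self.W, self.b)]

    def fit(self, X, y):
        H = [self.hidden(x) for x in X]
        n = len(H)
        # Ridge regression in dual form: alpha = (H H^T + ridge*I)^-1 y, beta = H^T alpha.
        K = [[sum(p * q for p, q in zip(H[i], H[j])) + (self.ridge if i == j else 0.0)
              for j in range(n)] for i in range(n)]
        alpha = solve(K, y)
        self.beta = [sum(alpha[i] * H[i][k] for i in range(n)) for k in range(len(self.b))]
        return self

    def predict(self, x):
        return sum(bk * hk for bk, hk in zip(self.beta, self.hidden(x)))


X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y = [-1.0, 1.0, 1.0, -1.0]  # XOR: not linearly separable in the input space
model = ELM(n_inputs=2, n_hidden=50).fit(X, y)
print([1 if model.predict(x) > 0 else -1 for x in X])
```

The dual-form solve keeps the linear system as small as the number of training points, which suits the "train only the last layer" idea. Training amounts to a single linear solve, with no backpropagation.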

Digital Architecture of random projection network (review of prior work)

-Neuromemristive Random Projection Network

-Synthesized for IBM 65nm process

-Taped out test chip 6 months ago

-Convolutional Drift Networks

-Video activity classification

Jin Ping Han

IBM

Bioinspired Dynamic Frontend for spike based speech recognition

review of the difficulty of “operating Alexa in a noisy environment”

review of a study of bat echolocation & ear motion

(see slide)

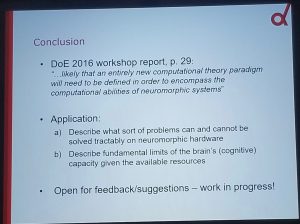

Johan Kwisthout

Radboud University

Neuromorphic Complexity Analysis

Where do we fit?

-what sort of problems are efficiently solvable on a neuromorphic computer?

-are these problems different from, or the same as, the problems efficiently solvable on a von Neumann architecture?

Rationale

-non-von Neumann architecture

-energy as a bounded resource

-analog spiking behavior

-noise/randomness

Proposed Computation Framework. Key Aspects:

-colocated memory and computation

-learning and adapting

-noise used as a resource

-spike behavior

Open issues and research questions

-relationships with traditional models

-criteria for complexity classes

-structural complexity theory

notions of acceptance criteria

-stability of distribution

-time to convergence

-energy limitations

Conclusions: (see pic)

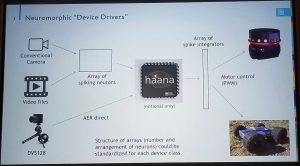

Fred Rothganger

Sandia National Laboratories

A neural inspired software stack

Generic OS Stack

-source file

-compiler

-loader

-operating system

BUT: neuro inspired devices are not general-purpose computers.

Neuromorphic “Device Drivers”

What is common across most neuromorphic architectures?

-leaky integrate and fire

-spike messages, with configurable weight and delay
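The common abstraction listed above (leaky integrate-and-fire neurons receiving spike messages with a weight and a delay) can be sketched as a discrete-time model. The decay, threshold and reset values are assumptions. Real devices differ in details such as refractory periods and synaptic current dynamics.

```python
from collections import defaultdict


class LIFNeuron:
    """Discrete-time leaky integrate-and-fire neuron (illustrative parameters)."""

    def __init__(self, decay=0.9, threshold=1.0, v_reset=0.0):
        self.decay = decay          # fraction of membrane potential kept each step
        self.threshold = threshold
        self.v_reset = v_reset
        self.v = 0.0

    def step(self, input_current):
        self.v = self.decay * self.v + input_current  # leak, then integrate
        if self.v >= self.threshold:                  # fire and reset
            self.v = self.v_reset
            return True
        return False


def simulate(input_spike_times, weight, delay, steps):
    """Drive one LIF neuron through a synapse with a given weight and delay."""
    pending = defaultdict(float)  # arrival time -> summed synaptic weight
    for t in input_spike_times:
        pending[t + delay] += weight
    neuron = LIFNeuron()
    spikes = []
    for t in range(steps):
        if neuron.step(pending[t]):
            spikes.append(t)
    return spikes


# Three input spikes close together push the neuron over threshold; a lone one does not.
print(simulate([0, 1, 2, 10], weight=0.4, delay=3, steps=20))  # [5]
```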

Units may remain on the CPU because they are incompatible or because the device is full

Software stack automatically “migrates” compatible units onto STPU, up to its capacity

A language for neural computing

Three visions

1) TensorFlow-type networks

2) Spiking networks

3) Neuroscience Models

Where will novel algorithms come from in the future? (he thinks neuroscience)

What language will best express these algorithms?

-something more general than either tensors or LIFs

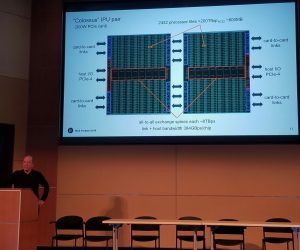

Simon Knowles

Graphcore

Designing Processors for the Nascency of Machine Intelligence

Small British Company (75)

“Machine Intelligence”–nothing artificial about intelligence

Nations which do not master it will fall far behind

Programming, software and hardware will all change... but how?

A canonical intelligent agent is a Bayesian probabilistic sequence-to-sequence translator

The search for intelligence also needs something like evolution to explore model structures.

Knowledge models are high dimensional data, naturally represented as graphs

-the same graph is a natural partitioning for distributed inference computation

For machine efficiency, the structure of the graph can remain static during optimization.

Some types of structure search can also be cast as static graphs

our comprehension and mechanization of intelligence is nascent

-understanding of models and learning algorithms is changing rapidly

-huge compute required for model discovery as well as model optimization

Until we understand intelligence better, we need machines which…

-exploit massive parallelism

-are agnostic to model structure

-have a simple programming abstraction

-are efficient for both training and deployed inference

Good computer architecture bets for this new workload

-massive exposed parallelism

-large graphs are necessarily sparse;

-static structure allows compiled communication

-low precision arithmetic

-approximate inference on probabilistic models learned from noisy data

-physical data invariances provide some structural priors

-convolutions, recurrence

-noise generation

Silicon scaling is limited by power

Throughput-oriented silicon should be mostly memory

Silicon efficiency is the full use of available power

-distributed memory

-recompute what you can’t memorize locally

-compile communications

-serialize communication and compute

Proximity is defined by energy, more than by time.

Poor return on processor complexity: Pollack’s Rule

-processor performance ~ sqrt(#transistors)
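A quick worked example shows why Pollack’s Rule favors many simple cores. Spending a fixed transistor budget on one big core buys only ~sqrt(budget) performance. Splitting the same budget across n cores gives n·sqrt(budget/n) = sqrt(n·budget) aggregate throughput. This assumes perfectly parallel work, which is exactly why the talk stresses exposing a lot of parallelism. The budget units are arbitrary.

```python
import math


def pollack_performance(transistors):
    """Pollack's Rule: single-core performance ~ sqrt(transistor count)."""
    return math.sqrt(transistors)


def aggregate_throughput(budget, n_cores):
    """Split a fixed transistor budget evenly into n_cores simple cores.
    Assumes perfectly parallel work (no communication cost, no serial fraction)."""
    return n_cores * pollack_performance(budget / n_cores)


for n in (1, 4, 16, 64):
    print(n, aggregate_throughput(64, n))
# 1 8.0
# 4 16.0
# 16 32.0
# 64 64.0
```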

Pure Distributed Machine

-static partitions of work and memory

-Threads hide only local latencies (arithmetic, memory, branch)

-Compiled communications patterns over a stateless exchange

Distributed SRAM on chip instead of DRAM on interposer

We need to expose A LOT of parallelism

-much more than parallelizing over one maths kernel at a time

“Colossus” IPU

-all new pure distributed multi-processor built specifically for the ongoing discovery and early deployment of MI

-mostly memory “model on chip”

-a cluster of IPU acts like a bigger IPU

-stepwise-compiled deterministic communication under BSP

-programmable using standard frameworks or Poplar native graph abstraction

-TensorFlow, PyTorch, Caffe2, MXNet

Bulk Synchronous Parallel

-Massively parallel programming with no concurrency hazards

-Communication patterns are compiled, but dynamically selected
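Here is a minimal simulation of the BSP superstep structure: compute on local state, exchange along a fixed pattern, then a barrier. This is a generic model of BSP, not Graphcore’s Poplar API. The tile/exchange naming and the max-propagation example are illustrative assumptions.

```python
def bsp_run(local_values, exchange_pattern, compute, supersteps):
    """Simulate Bulk Synchronous Parallel execution.

    Each superstep has three phases:
      1. compute: every tile updates its own state using only local data
         and the messages delivered in the previous exchange;
      2. exchange: messages move along a fixed, precompiled (src, dst) pattern;
      3. barrier: nothing from this superstep is visible until all tiles finish.
    Tiles never read each other's state mid-superstep, so there are no races,
    whatever order the tiles are processed in.
    """
    state = list(local_values)
    inbox = [[] for _ in state]
    for _ in range(supersteps):
        # Compute phase: reads only own state + own inbox.
        state = [compute(s, msgs) for s, msgs in zip(state, inbox)]
        # Exchange phase: follow the compiled communication pattern.
        inbox = [[] for _ in state]
        for src, dst in exchange_pattern:
            inbox[dst].append(state[src])
        # Barrier: the loop boundary; the next superstep sees the new inboxes.
    return state


# Example: propagate the maximum around a ring of 5 tiles.
n = 5
ring = [(i, (i + 1) % n) for i in range(n)]  # static communication pattern
result = bsp_run([3, 9, 1, 4, 2], ring, lambda s, msgs: max([s] + msgs), supersteps=n)
print(result)  # [9, 9, 9, 9, 9]
```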

To make a computer like this you need to be very good at load balancing

Serializing compute and communication maximizes power-limited throughput

The important thing is to be able to compile patterns of communication

Andrew Sornborger

Los Alamos National Laboratory

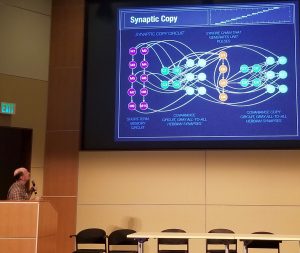

A pulse gate-mechanism for synaptic copy…

Can we copy a synapse from one place to another?

-HM example, memory consolidation

background: synfire chains

-synfire-gated synfire chains for graded information propagation

-how to compute with SGSCs

-learning

-transferring synapses

Synfire Chain: feedforward network of neurons, activation propagated from one layer to another

-Original hope: to propagate graded information with synfire chains

Synfire-gated synfire chains

-use neural populations in a conventional synfire chain as a pulse generator to push secondary populations above threshold

Implications

-separation of information control (gating pulses)

-information content (graded firing rates)

Hava Siegelmann (Presenting as a performer now)

Abstraction as a crucial enabling property to L2M

Symbolic Hierarchy across the human cortex

Inspiration

-Visual stream hierarchy

-deep neural networks form abstractions in deeper layers

Relating behavior and cognition with neural structure

-Functional MRI

-Big data: activation coordinates are associated with tasks

Fundamental principles of the human cortex reveal interrelated geometric hierarchies

1: connectome hierarchy in brain regions

2: behavioral hierarchy

3: aggregation of representation, cognition, & abstractions

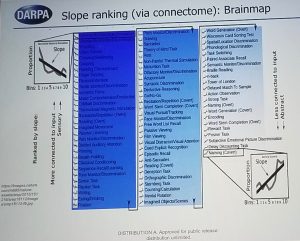

Looked at timing of activation of brain regions as a metric for computational time, where later activation is assumed higher-level, etc.

Tried to correlate behavior with fMRI activations

-count the number of activation instances of each behavior per bin

-normalize activations by the total

-approximate with a line

-identify the slope

-The slope is negative for finger tapping (“bottom up”)

-The slope is positive for reasoning
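The slope procedure above can be sketched as follows. Two assumptions apply. First, the bins are ordered from low to high level in the cortical hierarchy. Second, the activation data are hypothetical toy numbers chosen to show the two signs; they are not results from the talk.

```python
def hierarchy_slope(bin_indices, n_bins):
    """Estimate the hierarchy 'slope' of one behavior.

    bin_indices: for each activation instance of the behavior, the index of the
    hierarchy bin it falls in (0 = lowest level, n_bins - 1 = highest; assumed).
    """
    counts = [0] * n_bins
    for b in bin_indices:                # count activation instances per bin
        counts[b] += 1
    total = sum(counts)
    freqs = [c / total for c in counts]  # normalize by the total
    # Least-squares line fit freq = slope * bin + intercept; return the slope.
    xs = range(n_bins)
    x_mean = sum(xs) / n_bins
    y_mean = sum(freqs) / n_bins
    cov = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, freqs))
    var = sum((x - x_mean) ** 2 for x in xs)
    return cov / var


# Hypothetical data: a motor task concentrated in low bins, a reasoning task in high bins.
finger_tapping = [0, 0, 0, 1, 1, 2]
reasoning = [1, 2, 2, 3, 3, 3]
print(hierarchy_slope(finger_tapping, 4) < 0)  # True
print(hierarchy_slope(reasoning, 4) > 0)       # True
```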

Slope ranking of various activities

-Some activities appear more “top down” and some more “bottom up” in terms of the brain hierarchy.

Symbolic Continuity Conclusions

-fundamental principle: brain is structurally organized to produce behavioral abstraction

Next Directions

-check flow of cognitive behavior in health and under different situations

-could we find relations among similar behaviors

-…

Kathleen Hamilton

Oak Ridge National Laboratory

Sparse Hardware embedding of spin glass spiking neuron networks for community detection

Understanding network dynamics has many real world applications

-spread of epidemics through social networks

-failures on networks can become catastrophic events

-how many baby monitors does it take to take down the internet?

Analysis of graph structure

-shortest path between two points

-identifying or quantifying important vertices on a graph

-identifying communities in a graph

Our approach to using neuromorphic hardware for graph-related problems

Analyzing graph structure from spiking data

-What characteristics are needed

-what is useful output?

Hopfield Neural network

-constructing a network for graph bi-partitioning is straightforward
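One standard way to build such a network uses a non-spiking Hopfield model; this is an assumption, not the spin-glass spiking networks from the talk. Spins s_i = ±1 mark the side of the partition. Weights W_ij = A_ij - gamma reward keeping neighbors together and penalize imbalance. Asynchronous updates then descend the energy E = -1/2 Σ W_ij s_i s_j. The gamma value, initial state and example graph are illustrative.

```python
def hopfield_weights(adj, gamma):
    """W_ij = A_ij - gamma for i != j: edges pull neighbors onto the same side,
    while the uniform -gamma term pushes every pair apart, penalizing imbalance."""
    n = len(adj)
    return [[0.0 if i == j else adj[i][j] - gamma for j in range(n)] for i in range(n)]


def energy(W, s):
    n = len(s)
    return -0.5 * sum(W[i][j] * s[i] * s[j] for i in range(n) for j in range(n))


def bipartition(adj, gamma=0.5, init=None, max_sweeps=100):
    """Asynchronous Hopfield updates; with symmetric W and zero diagonal,
    no single flip increases the energy."""
    n = len(adj)
    W = hopfield_weights(adj, gamma)
    s = list(init) if init is not None else [1] * n
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(W[i][j] * s[j] for j in range(n))
            new = 1 if field >= 0 else -1
            if new != s[i]:
                s[i], changed = new, True
        if not changed:  # fixed point = local energy minimum
            break
    return s


# Two triangles joined by a single edge (2-3).
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
n = 6
adj = [[0] * n for _ in range(n)]
for a, b in edges:
    adj[a][b] = adj[b][a] = 1
print(bipartition(adj))  # [-1, -1, -1, 1, 1, 1]
```

In practice one would run several random initial states and keep the lowest-energy result, since asynchronous descent only guarantees a local minimum.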

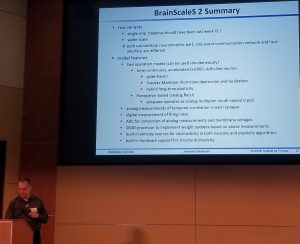

Johannes Schemmel

Heidelberg University

Toward the second-generation BrainScaleS system

Motivation for the BrainScaleS neuromorphic system

-future computing based on biological information processing–>need model system to test ideas–>understand …?

Review of BrainScaleS

-wafer scale event communication

BrainScaleS-2: hybrid plasticity introduced:

-software controlled local plasticity

-non-linear dendrites and structured neurons

-accelerated neuron model

time constants are much shorter in silicon, which leads to accelerated simulation

no multiplexing of components storing model variables

-each neuron has its own membrane capacitor

-each synapse has a physical realization

Can use plasticity processing unit for other things, like calibrating membrane resting potential

-since it’s a “general CPU”?

BrainScaleS-2 supports different spike types

-emulations of all three spike types needed

-NMDA plateau potentials create non-linear dendrites

-Calcium spike as coincidence detection between basal and apical inputs

-Demoed coincidence detection using structured neurons

-summation of distal and proximal dendrite signals to push above threshold

-(See pic for review slide)

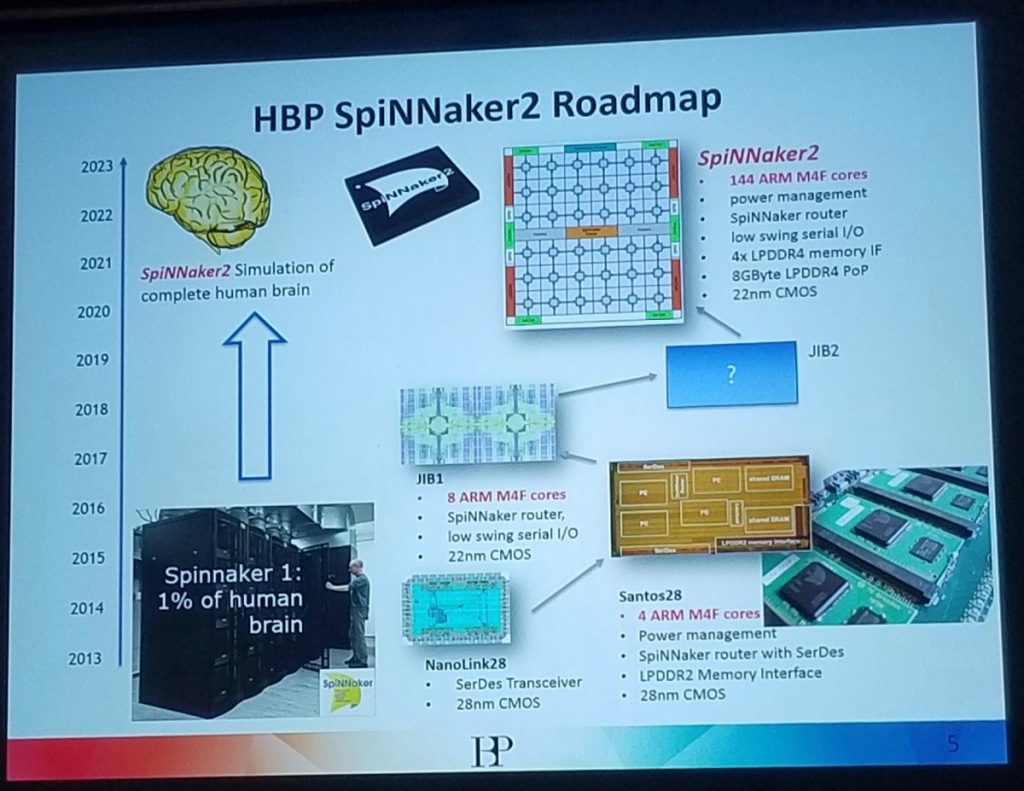

Sebastian Höppner

TU Dresden

SpiNNaker2

Review Version 1

-programmable many-core ARM

-130nm CMOS

-“Broad” user base of 40 systems in use around the world

-SpiNNaker2 target: 22nm process (22FDX)

Roadmap (see pic)

floating point support now available

Dynamic power management

memory sharing

-synchronous access to neighbor PEs

-Multiply accumulate

-neuromorphic accelerators

-network on chip

-adaptive body biasing

-the old 12x12 inch board now fits on a single chip

Mike Davies

Intel

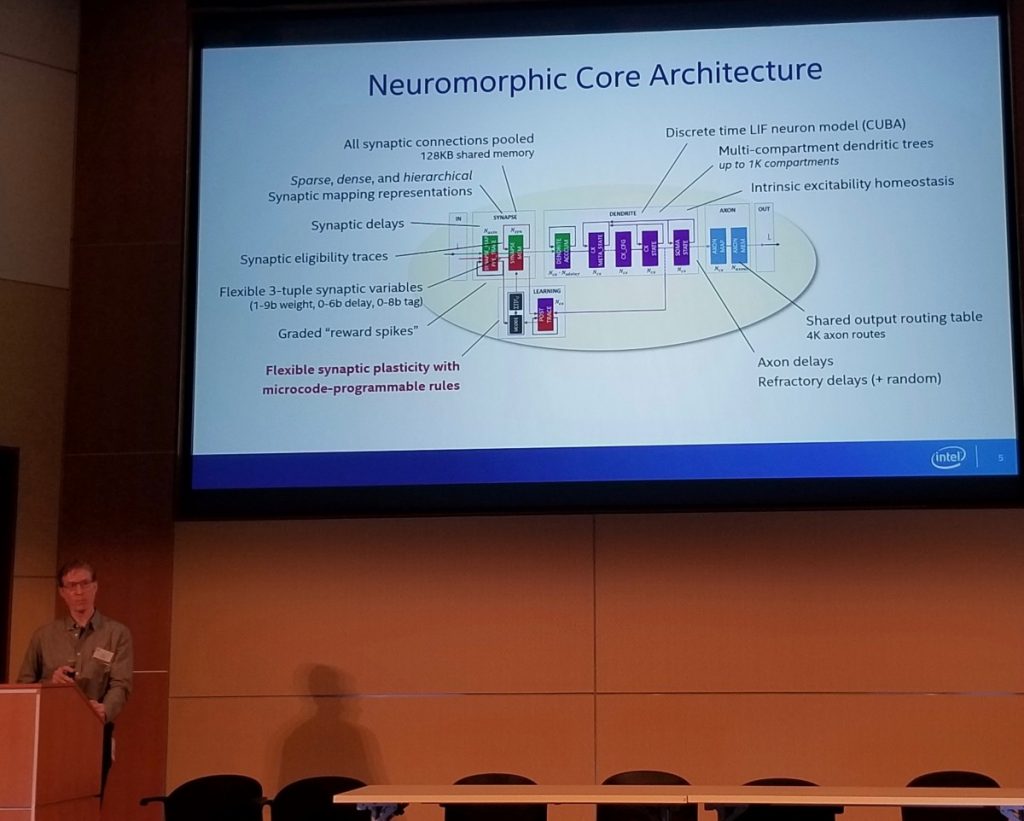

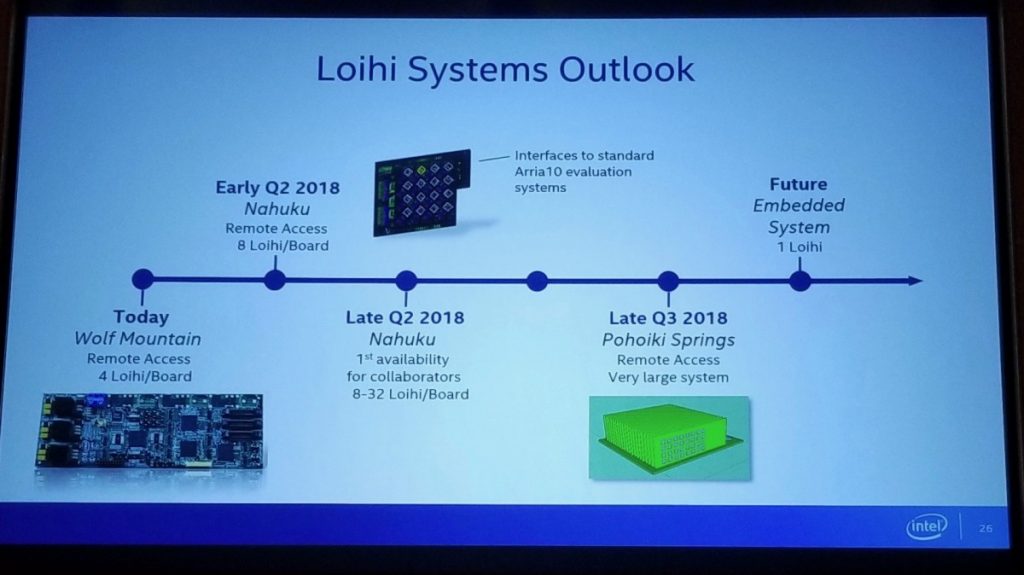

Loihi a brief introduction (more details coming)

128 neuromorphic cores, up to 128k neurons and 128M synapses, supporting advanced SNNs

Feature set

-Scalable on chip learning

-Supports highly complex neural network topologies

-Fully digital asynchronous implementations

-14nm FinFET process

Loihi is not a product; we are not selling it, but are looking for partners to develop software and applications

~20pJ synaptic inference

embedded x86 cores

-efficient spike-based communications with neuromorphic cores

-data encoding decoding

-etc (see pic)

Trace-Based Programmable Learning Rules

Day 2

Bob Colwell

Some observations about the near future of alternative computing technology

Does not agree with Mayberry: Moore’s Law is ending, and it’s obvious

Conventional digital CMOS is already good enough for lots of things

Can’t dislodge the incumbent by barely beating it

-would have to win by very large margin across board

-make something important feasible that was not before

NICE is going to have to learn to play nice with incumbent tech

-accelerators

-add-on facilities for servers

-add-on tech for conventional SoCs

-match market economics

There is a set of economics that is not visible until you try and enter it, then it becomes very clear very quickly

“Our tools for automatically allocating workloads is pretty terrible”

It is easy to design things that cannot be effectively programmed

What can we learn from computing history?

General-purpose computing ruled for decades because of a golden rule:

-better perf on existing code + new apps & OS’s = $$Profits

-CPU perf/features got exponentially & predictably better over time

-CPU perf has stalled, and GPU perf will too, due to thermals

NICE accelerators will improve for a few years, but Moore’s Law is still dead

-OS’s/SW got better (and demanded better CPU’s)

-new SW will take advantage of new NICE accelerators BUT

-our ability to program heterogeneous accelerators is poor

-what are the apps? those are what drive demand, not “capability” per se

-Platform improvements did not choke (PCI, QPI, USB, DRAM, buses, caches…)

-still some room for improvement here

-but DRAM is dying, system economics worsening

-overall system cost fell drastically due to huge unit volumes

-smartphone volumes are reaching saturation

-IoT volumes will dwarf smartphone, but there’s no profit there

-security issues have remained annoyances, not limiters

-there was a predictable future safeguarding today’s investments

General computing largely ignored efficiency

-MPEG-2 HW decoder 1000x > software decoder on CPU

-Tasks that are too much for CPU’s may be feasible with accelerators

-the end of Moore’s Law means you can no longer just wait around for a faster machine to appear; only accelerators will enable certain classes of new apps

-what new apps? Dunno. We never knew until they appeared

Efficiency became 1st order concern in 2004 when sys thermals hit air-cooling limit

-industry answer: multi-core

Switch away from historic “design to be correct” to “design knowing there will be emergent behavior” and algorithmic errors/misuse

-emergent behavior is never in your favor

-it arises from system complexity

-the same system complexity that keeps most humans from understanding how the system really works or why it does what it does

There will be unintended “communication paths” in the systems you sell into

-Spectre/Meltdown

-RowHammer

-EMI,RFI,gnd/VCC coupling, intentional mistreatment by hackers

There will be failures, Software and Hardware

You must judiciously provide backup plans

-but don’t make the usual error of treating them less seriously than the main plan

-thinking you have a backup, when you actually don’t, is worse than having none

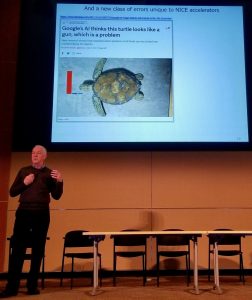

Google’s AI thinks this turtle looks like a gun

-systems are not designed around algorithmic limitations

-we can’t figure out why the system errs, or even that it is erring

-And if a given system learns, it’s even harder to know what it will do.

Classifying an image that looks like noise confidently as a zebra is a big problem

Tragedy of the commons paths

-aka shared resources

-power supply, ground returns, EMI

-Thermals

-Security-related behavior

-manage these despite inevitable design errors

Thermals is the one I worry about most

-each hetero agent uses supply current, generates ground return current, & contributes to overall thermal load

What about “Machine Check”?

-after 40 years we have no standards in the area

The heterogeneous future is inheriting an ad hoc, crazy quilt

If you walk up to a CEO of an established company with a new idea and they do not own it, it will be perceived on the threat axis first.

If you get designed onto Apple’s platform, understand they don’t want you there.

Christoph von der Malsburg

Platonite

Dynamic Link Architecture

The state of Neural Computation is dominated by deep learning on GPU

No need for neuromorphic computing currently

The future: artificial general intelligence

The scope issue

-present systems have very limited scope

-human intelligence encompasses our whole life!

Conceptually, AI has made no progress since…list of big names

There is a Roadblock!

-The Neural Code Issue: how does neural tissue represent mental phenomena

Classical AI: Bits

-completely general

-to be generated/interpreted by algorithms

-intelligence only in programmers mind

-AGI beyond the economic power of the world

Artificial Neural Networks: neurons

-neurons as logical propositions

-each decision needs a dedicated neuron

-missing concept: compositionality

-lack of expressive power

-deep learning needs too many examples

Conclusion: bits are too general, neurons are too narrow. AI is a mere shadow of human thought

The Human Model

-one GB of genetic information suffices to make the brain

-one GB of virtual reality would suffice to train the brain

-Simple nursery

-200 million eye blinks over three years

3 year old children:

-grasp their environment

-navigate, manipulate

-act purposefully

-speak

-learn from a single inspection

Embodiment

Situatedness: local in space and time, no global maps, no big data

Conflict with Classical Computing

-programming bottom-up, not top-down

-impossible to know the actual state of the system due to non-deterministic, asynchronous operation

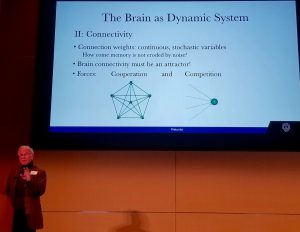

The Brain as a Dynamic System

-neural activity: continuous, stochastic variable, brain state trajectories are attractors, stabilizing forces

Connectivity: connection weights, continuous, stochastic variables

-How come memory is not eroded by noise?

Brain connectivity must be an attractor!

Forces: cooperation and Competition

Network Self-Organization

-1 petabyte of information in connectivity!

Attractor Nets: neural fields and topological mapping

-neural sheets

-shape, pose, texture, illumination, motions, etc

Comprehension by Abstraction

-“Scene”<-->“Schema”

See review slide pic

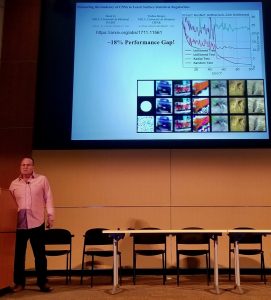

Garrett Kenyon

How do brains learn about the physical world?

Took a picture of an occluded suitcase and gave it to Google, which labeled it “floor” and returned similar images of traffic accidents, streets, etc.

ImageNet LSVRC 2017: the current best algorithm has 20% error

Bengio Paper: Measuring the tendency of CNNs to learn surface statistical regularities

https://arxiv.org/abs/1711.11561

What are we missing?

-lateral inhibition (leads to Bayesian inference)

Reconstruction example

Stereo Features Examples

Retina Example with gamma waves

Wolfgang Maass

Networks of Spiking Neurons Learn to Learn

L2L/Metalearning has been discussed for decades

Only recently, with sufficient computational power available, has it become an important tool in ML

Review Standard L2L Framework

-consider a family of learning tasks

-the first art is to define the family F in such a way that L2L produces the desired result

-the second art is to define the fitness function

-the third art is to choose the right NN and the right set of hyperparameters (HPs)

An essential difference from standard ML practice: testing is not carried out on new examples from the same learning task, but on new examples from a new learning task from the same family F.

Choose hyperparameters so that they define all aspects of learning in the neuromorphic device N

Use benchmark challenges that were proposed for L2L applied to non-spiking recurrent ANNs (LSTM networks).

-See slide for implementation details

Ported L2L framework on the HICANN-DLS chip

L2L Benchmarks on RL tasks

-navigation to a goal G in random mazes of a given size

-random MDP of a given size

Choosing a good optimization algorithm for the outer loop is essential

-Evolution Strategies

-Cross Entropy Method

-Simulated Annealing
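Here is a sketch of the cross-entropy method as an outer loop, assuming a diagonal Gaussian over hyperparameters. In a real L2L setup, the fitness would run the inner spiking learner on tasks sampled from F. Here `toy_fitness` is a hypothetical quadratic stand-in, and all parameter values are assumptions.

```python
import random
import statistics


def cross_entropy_method(fitness, dim, init_std=3.0, iterations=40,
                         population=50, n_elite=10, seed=0):
    """Maximize `fitness` over R^dim with the cross-entropy method.

    Keep a diagonal Gaussian over candidate hyperparameters, sample a
    population, keep the best `n_elite`, and refit the Gaussian to them.
    """
    rng = random.Random(seed)
    mean = [0.0] * dim
    std = [init_std] * dim
    for _ in range(iterations):
        samples = [[rng.gauss(m, s) for m, s in zip(mean, std)]
                   for _ in range(population)]
        elites = sorted(samples, key=fitness, reverse=True)[:n_elite]
        mean = [statistics.fmean([e[d] for e in elites]) for d in range(dim)]
        # Small floor keeps the search distribution from collapsing to zero width.
        std = [statistics.pstdev([e[d] for e in elites]) + 1e-3 for d in range(dim)]
    return mean


def toy_fitness(theta):
    """Hypothetical stand-in for 'mean reward of the inner learner on tasks
    sampled from F'; here simply peaked at theta = (2.0, -1.0)."""
    return -((theta[0] - 2.0) ** 2 + (theta[1] + 1.0) ** 2)


best = cross_entropy_method(toy_fitness, dim=2)
print([round(v, 2) for v in best])
```

CEM needs only fitness evaluations, not gradients, which is what makes it usable when the inner loop runs on hardware that cannot be differentiated through.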

Proof of concept: a bandit task with 11 one-armed bandits (slot machines), where the last machine gives a coded signal indicating which of the other 10 has the highest reward. Can it discover the hidden code?

-after 500k trials, learned to efficiently explore family of functions, …?

SNNs can also learn-to-learn from a teacher

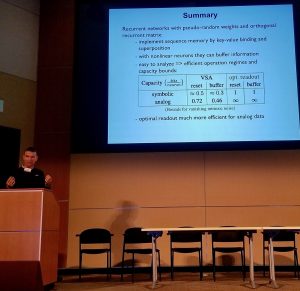

See Summary Slide Pic

Weinan Sun

Janelia Research Campus

Ion Channels, active dendrites, and computations

Pyramidal neurons are important because of prevalence in cortical circuits

Neurons are lipid bilayers in a salt solution

Membrane proteins are fundamental for electrical properties

input-output function of a neuron

-receive input current h

-transform through a function f(h)

-induce action potential

Process is an alternation between analog and digital signaling

Two key components shaping input processing in neurons

-ion channels and dendrites

membrane ion channels

-cell membrane 4nm thick

-over time/evolution, different ion channels inserted

-NaV, CaV, NMDAR, AMPAR, TRPM4, GIRK

“ion channels are the basic computation elements in neurons”

Three key players during input transformation

-voltage gated Na+ channel

-voltage gated K+ channel

-NMDA receptor

Common feature: voltage dependence

Dendritic Na+ spike

-Possible computational explanation: Gating of distal input by proximal input

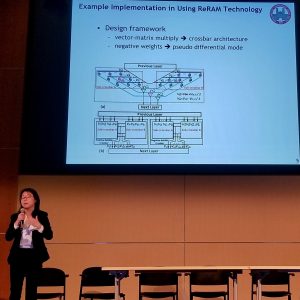

Xuan Zhang

Washington University

NeuADC: Neural network inspired architectures to exploit learning for A2D conversion

Motivations: Deep learning framework, Brain inspired model

One missing link: homogeneous units for hierarchical processing across the physical and information boundary (analog to digital boundary)

Application scenarios

-internet of things

-ultra-low power sensing and monitoring

-smart and autonomous systems

-high-bandwidth multi-modality sensor fusion

Electronic design automation (EDA)

-analog and mixed signal design automation challenge

-ADC is one quintessential example

NeuADC: a new paradigm

-unifying design and optimization

-universal interfacing block

Mapping ADC to NN

“Have to play tricks with the encoding scheme”

-use a smooth code to avoid abrupt bit transitions

Universal Interface

-reconfigurable hardware substrate

-trainable across modalities and applications

-potentially extend to other AMS blocks

Bill Aronson

AIRG

Proprietary neural network

focus/attention based

example of collision avoidance given

3 hidden layers

“The Matrix”

Hardware Reservoir Computer

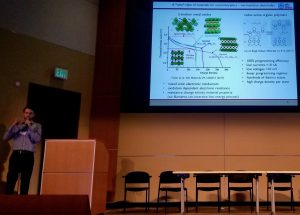

Elliot Fuller

Ionic floating-gate memory as an artificial synapse for neuromorphic computing

potentially very large gains for MAC operations

scalable to large arrays

desire linear programmability

ReRAM:

the time-voltage dilemma leads to a large voltage drop across the crossbar

Investigate three-terminal device to overcome challenges

-mixed ionic electronic conductors

oxidation dependent electronic resistance

resistance change intrinsic material property

implementing into transistor-like configurations

-control gate is a volatile filamentary switch

-filament forms, injects charge, then breaks and preserves charge on gate

devices are programmable down to 400 mV

can support massively parallel training operations

demonstrate low read currents, below 3 nA

1M cycle endurance

first things to demonstrate: speed and scaling

for scaled devices, looking at pulse times that may allow operation at maybe MHz rates

Abbas Rahimi

Efficient biosignal processing with brain-inspired high-dimensional computing

A universal ExG Classifier

Brain-inspired high-dimension computing

Superb Properties

-General and scalable model of computing

-well-defined set of arithmetic operations

-fast and one-shot learning (no need for backprop)

-memory-centric with embarrassingly parallel operations

-extremely robust

…

What are HD vectors?

-high-dimensional, holographic, pseudorandom with i.i.d. components

-can be combined (*, +, ρ) and compared (distance)
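The HD operations above can be sketched with dense binary hypervectors, which is one common HD variant. This encoding is an assumption and not necessarily the one used in the ExG classifier. XOR is used for binding (*), bitwise majority for bundling (+), cyclic shift for permutation (ρ), and normalized Hamming distance for comparison.

```python
import random

D = 10_000
rng = random.Random(42)


def random_hv():
    """Pseudorandom hypervector with i.i.d. components (dense binary)."""
    return [rng.randint(0, 1) for _ in range(D)]


def bind(a, b):
    """Multiplication (*): component-wise XOR; result is dissimilar to both inputs."""
    return [x ^ y for x, y in zip(a, b)]


def bundle(*vs):
    """Addition (+): component-wise majority; result stays similar to every input.
    Use an odd number of inputs so there are no ties."""
    half = len(vs) // 2
    return [1 if sum(bits) > half else 0 for bits in zip(*vs)]


def permute(a, k=1):
    """Permutation (rho): cyclic shift, e.g. to encode position in a sequence."""
    return a[-k:] + a[:-k]


def distance(a, b):
    """Normalized Hamming distance: about 0.5 for unrelated vectors, 0 for identical."""
    return sum(x != y for x, y in zip(a, b)) / D


a, b, c = random_hv(), random_hv(), random_hv()
s = bundle(a, b, c)
print(round(distance(a, b), 2))          # close to 0.5: random vectors are quasi-orthogonal
print(round(distance(s, a), 2))          # close to 0.25: the bundle stays near its members
print(distance(bind(bind(a, b), b), a))  # 0.0: XOR binding is its own inverse
```

Because every operation is component-wise (or a fixed shift), the same simple datapath serves both learning (bundling examples into class prototypes) and inference (distance to prototypes).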

Identical hardware for both learning and inference

Campbell Scott

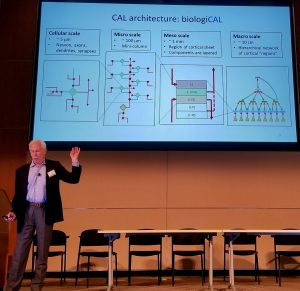

Introducing CAL: Context Aware Learning

Goal: design a robust system capable of learning by multimodal observation, continuously and unsupervised, predicting, ultimately making decision and acting on them

Design

-neurologically inspired

neurons in mini-columns, cortical layers and hierarchy

driving and modulating synapses, hebbian updates

stable network via homeostasis

avoid catastrophic forgetting

-Simplicity

binary neurons and synapses, sparse neural activity, sparse synaptic connections

a few canonical functions: correlation, sequence learning, feedforward with temporal pooling

-Importance of time

learn to predict

Results

-emergent invariance

-context for current input provided by modulating synapse

“its a network of networks”

Cellular Scale-single neuron

-lateral dendrite (modulating)

-basal dendrite (feed forward)

-apical dendrite (feedback modulates)

Microscale -mini column

-basic neural circuit

-correlator is L-IV cells

-driven by synapse on basal dendrite

Sequence memory is (layer of) mini-columns

-mini-column defined by common L-IV cell

-L-II/III cells connect laterally to modulate

Meso Scale

-region of cortical sheet

-components are layers

-structural differences

-functional differences

-similar over entire cortex

Macro-Scale

-hierarchical networks of regions

-connected by fibers

-feed-forward and feedback

-direct or routed via thalamus

Threshold modulation – provides context

Synapse Updates are Hebbian

-start with only potential synapses; based on activity, new ones are made

-stabilize by principles of homeostasis

“conditional Hebb”

-if axon or dendrite has excess connections, do not strengthen

-if axon or dendrite has too few connections, do not weaken

Do not forget – long term memory

-nonlinear Hebb to avoid “catastrophic forgetting”

-reduce permanence decrements for well established synapse

-results in two populations of synapses: plastic and permanent
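Here is a sketch of the synapse-update rules above, in the permanence style of HTM-like models. The following assumptions are illustrative and not from the talk: a connection threshold on permanence; homeostatic bounds counted per dendrite only (the talk also mentions axons); and a decrement scaled by (1 - p)^2 as one possible form of the “nonlinear Hebb”.

```python
CONNECT_THRESHOLD = 0.5    # permanence at which a potential synapse counts as connected
INC, DEC = 0.05, 0.05      # base Hebbian increment / decrement (illustrative)
MIN_CONN, MAX_CONN = 2, 4  # homeostatic bounds on connections per dendrite (illustrative)


def connected_count(perms):
    return sum(p >= CONNECT_THRESHOLD for p in perms)


def hebbian_update(perms, pre_active, post_active):
    """One conditional, nonlinear Hebbian step for the potential synapses of one dendrite.

    perms[i]       permanence of potential synapse i (0..1)
    pre_active[i]  whether presynaptic axon i fired
    post_active    whether the postsynaptic neuron fired
    """
    if not post_active:
        return list(perms)
    n_conn = connected_count(perms)
    new = []
    for p, pre in zip(perms, pre_active):
        if pre:
            # Conditional Hebb: an over-connected dendrite does not strengthen further.
            if n_conn < MAX_CONN:
                p = min(1.0, p + INC)
        else:
            # Conditional Hebb: an under-connected dendrite does not weaken further.
            if n_conn > MIN_CONN:
                # Nonlinear Hebb: decrements shrink as permanence grows, so strong
                # synapses become effectively permanent while weak ones stay plastic.
                p = max(0.0, p - DEC * (1.0 - p) ** 2)
        new.append(p)
    return new


perms = [0.9, 0.6, 0.55, 0.3, 0.2]
pre = [True, False, True, False, True]
print([round(p, 4) for p in hebbian_update(perms, pre, post_active=True)])
# [0.95, 0.592, 0.6, 0.2755, 0.25]
```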

Fritz Sommer

UC Berkeley

Working memory for structured data in neural networks

“Attempt to summarize a 40-page paper in 18 slides”

Distributed representations in the brain

simple cell (primary visual cortex) –> grid cell (entorhinal cortex)

Computing with distributed representations

-neural networks

– synapses, neurons, …

-vector symbolic architectures (VSA)

-hyperdimensional computing

-representation of symbols: pseudo-random vectors

-key-value binding

create keys on the fly

representation of item set

-reservoir networks
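Key-value binding and unbinding in a VSA can be sketched with bipolar (±1) vectors, using elementwise multiplication as binding and addition as superposition. This MAP-style encoding is an assumption and may differ from the model in the talk. Keys are just fresh random vectors, which is why creating them on the fly is cheap.

```python
import random

D = 2048
rng = random.Random(7)


def rand_vec():
    """Symbol = pseudo-random bipolar vector (+1/-1 components)."""
    return [rng.choice((-1, 1)) for _ in range(D)]


def bind(a, b):
    return [x * y for x, y in zip(a, b)]  # self-inverse: bind(bind(a, b), b) == a


def bundle(vs):
    return [sum(col) for col in zip(*vs)]  # superposition of several vectors


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Item memory of known values (used for clean-up after unbinding).
values = {name: rand_vec() for name in ("red", "green", "circle", "square", "small", "large")}
# Keys are new random vectors, so they can be created on the fly.
keys = {name: rand_vec() for name in ("color", "shape", "size")}

# A structured record {color: red, shape: circle, size: small} as a single vector.
record = bundle([bind(keys["color"], values["red"]),
                 bind(keys["shape"], values["circle"]),
                 bind(keys["size"], values["small"])])


def query(record, key):
    """Unbind with the key, then return the closest stored value (clean-up)."""
    noisy = bind(record, key)
    return max(values, key=lambda name: dot(noisy, values[name]))


print(query(record, keys["shape"]))  # circle
```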

Constantine Michmizos

Brain-Morphism: astrocytes as memory units

Good review of computational neuroscience over the years

dogma that brain equals neurons is highly prevalent

Good reason: neurons are electrically active and we can manipulate them

up to 90% of brain cells are not neurons, they are glia cells

-they are electrically silent

until recently we could not see function of astrocytes

astrocytes form their own communication network, and interact with neuron synapse networks

Two opportunities:

-Create computational models of astrocytes

-Create neuron-astrocyte networks

How to model?

inter-cellular Ca2+ wave propagation in space and time

How astrocytes control neurons

Tripartite Synapse

-hears what neuronal component is saying

-modulates the neuronal component by inhibiting pre-synaptic or injecting current into post-synaptic neuron

Could explain some brain (delta) waves?

Goal was to find a learning rule that would generate a transition between memories (in a Hopfield network)

“Learning is expanding beyond weight change”

astrocytes can sense and impose synchronicity in small populations of neurons

Day 3

Mike Davies

Introducing Loihi

The case for neuromorphic computing: emerging computing workloads demand intelligent behaviors that we do not know how to deliver efficiently with today’s algorithms and computing architectures. Examples:

-online and lifelong learning

-learning without cloud assistance

-learning with sparse supervision

-understanding spatiotemporal data

-probabilistic inference and learning

-sparse coding/optimization

-nonlinear adaptive control

-pattern matching with high occlusion

-SLAM and path planning

Potential Future Applications: robotics, HPC systems, neuroprosthetics, smart glasses

Research Goals

-Broad class of brain inspired computation

-Efficient hardware implementations

-Scalable from small to large problems and systems

Intersection of conventional ML, brain-inspired computational algorithms, competitive computer architectures

going after spiking neural networks

-Nature has come up with something amazing. Let’s copy it…

-Not so simple–very different design regimes

We want to rapidly reprogram the technology, which biology cannot achieve.

Objectives and constraints are largely the same

-energy minimization

-fast response times

-cheap to produce

Interconnect is relatively straightforward

“A series of little table lookups”

One compelling example: LASSO Sparse Coding

-LASSO Optimization using the spiking locally competitive algorithm

-We can get to within 1% of the optimal solution much faster than conventional approaches
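Here is a non-spiking sketch of the locally competitive algorithm (LCA) for LASSO, which minimizes 1/2||x - Φa||² + λ||a||₁. Loihi runs a spiking variant. This continuous-time version, discretized with Euler steps, is swapped in for clarity. The dictionary, λ and step size are illustrative assumptions.

```python
import math


def dot(p, q):
    return sum(pi * qi for pi, qi in zip(p, q))


def soft_threshold(u, lam):
    """a = T_lambda(u): the LCA activation for the L1 (LASSO) penalty."""
    if u > lam:
        return u - lam
    if u < -lam:
        return u + lam
    return 0.0


def lca(Phi, x, lam=0.1, dt=0.05, steps=2000):
    """Non-spiking LCA: minimizes 0.5*||x - Phi a||^2 + lam*||a||_1.

    Phi is a list of unit-norm dictionary atoms; x is the input signal.
    Neuron m has potential u[m] driven by b[m] = <phi_m, x> and inhibited by
    active neighbours in proportion to their overlap <phi_m, phi_n>.
    """
    M = len(Phi)
    b = [dot(phi, x) for phi in Phi]  # feed-forward drive
    G = [[dot(Phi[m], Phi[n]) if m != n else 0.0 for n in range(M)] for m in range(M)]
    u = [0.0] * M
    for _ in range(steps):
        a = [soft_threshold(um, lam) for um in u]
        # Euler step of du/dt = b - u - G a (lateral inhibition via G).
        u = [u[m] + dt * (b[m] - u[m] - sum(G[m][n] * a[n] for n in range(M)))
             for m in range(M)]
    return [soft_threshold(um, lam) for um in u]


r = 1 / math.sqrt(2)
Phi = [[1.0, 0.0], [0.0, 1.0], [r, r]]  # two axis atoms + one diagonal atom (unit norm)
x = [r, r]                               # input that exactly matches the diagonal atom
print([round(v, 3) for v in lca(Phi, x)])  # [0.0, 0.0, 0.9]
```

The lateral inhibition lets the diagonal atom suppress the two axis atoms, so the code is sparse (one active unit) with the usual LASSO shrinkage of λ on its coefficient.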

2D Mesh, Packetized spikes, high fanout required, low overhead synchronization

But how to scale to large LCA problems?

-Answer: patch-based connectivity reuse

-analogous to the “convolution” in convnets

-sparse coding results: 5000X better energy-delay product when compared to Atom CPU

Learning with Synaptic Plasticity

-local learning rules: an essential property for efficient scalability

—>compatible with biological plausibility

-Should be derived by optimizing an emergent statistical objective

—>too much directionless experimentation otherwise

-Plasticity on wide range of time scales is needed

—>Delayed reward/punishment responses, eligibility traces

-Reward spikes may be used to distribute graded reward/punishment values to a particular set of axon fanouts
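The delayed-reward point can be illustrated with a generic three-factor rule. Coincident pre/post activity bumps a decaying eligibility trace, and the weight changes only when a graded reward or punishment value arrives later. This is a minimal discrete-time sketch with assumed constants (`tau_e`, `eta`, `w0`). It does not reproduce Loihi's reward-spike mechanism.

```python
import math

def three_factor(pre, post, reward, tau_e=20.0, eta=0.1, w0=0.5):
    """Reward-modulated plasticity with an eligibility trace (discrete time).

    A coincident pre/post spike tags the synapse by bumping its eligibility
    trace; the trace decays with time constant tau_e; the weight changes only
    when a (possibly delayed) reward or punishment value arrives."""
    decay = math.exp(-1.0 / tau_e)
    e, w = 0.0, w0
    for s_pre, s_post, r in zip(pre, post, reward):
        e *= decay                    # the tag fades while waiting for reward
        if s_pre and s_post:
            e += 1.0                  # Hebbian coincidence marks the synapse
        w += eta * r * e              # r > 0 rewards, r < 0 punishes, r == 0 does nothing
    return w

T = 100
pre, post, reward = [0] * T, [0] * T, [0.0] * T
pre[10] = post[10] = 1                # coincidence at t = 10
reward[30] = 1.0                      # reward arrives 20 steps later
print(round(three_factor(pre, post, reward), 4))   # 0.5368
```

The 20-step delay shrinks the update by a factor e^(−20/20), so the credit a reward can assign falls off with delay at the rate set by `tau_e`.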

“People have been playing with STDP a long time and it has not led to anything”

Trace-Based Programmable Learning

Multiple state variables per synapse
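Here is a generic illustration of trace-based learning: pair-based STDP written as a sum of products of spikes and exponentially decaying traces. Loihi is reported to express its programmable rules in a similar sum-of-products form over traces. This code does not use Loihi's rule syntax, and the `tag` field is a hypothetical second per-synapse state variable included only to show "multiple state variables per synapse".

```python
import math
from dataclasses import dataclass

@dataclass
class Synapse:
    weight: float = 0.5
    tag: float = 0.0      # hypothetical second state variable: total plasticity seen

def stdp_with_traces(pre_spikes, post_spikes, syn, tau_x=10.0, tau_y=10.0,
                     a_plus=0.01, a_minus=0.012):
    """Pair-based STDP as a sum of products of spikes and traces."""
    x1 = y1 = 0.0                                   # pre- and post-synaptic traces
    dx, dy = math.exp(-1 / tau_x), math.exp(-1 / tau_y)
    for s_pre, s_post in zip(pre_spikes, post_spikes):
        x1, y1 = x1 * dx, y1 * dy                   # traces decay every step
        # potentiation: a post spike reads recent pre activity (x1);
        # depression: a pre spike reads recent post activity (y1)
        dw = a_plus * x1 * s_post - a_minus * y1 * s_pre
        syn.weight += dw
        syn.tag += abs(dw)
        x1 += s_pre                                 # traces jump after this step's update
        y1 += s_post
    return syn

pre, post = [0] * 20, [0] * 20
pre[5], post[8] = 1, 1                              # pre leads post by 3 steps
print(stdp_with_traces(pre, post, Synapse()))
```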

Example Novel Algorithms Supported by Loihi

-Artificial Olfaction / Spatiotemporal Attractors

-Sudoku / Constraint Satisfaction

-Path Planning / Graph Search
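For the path-planning item, here is a minimal sketch of the spiking-wavefront idea, assuming each graph node is a neuron that fires once and each edge is a synapse whose delay equals the edge cost. Under those assumptions, first-spike times are shortest-path distances. Simulated event by event, this is Dijkstra's algorithm in disguise. It is not the Loihi implementation shown in the talk, and the graph is made up.

```python
import heapq
import itertools

def spike_wavefront(graph, source):
    """Event-driven spiking wavefront: returns first-spike times and parents."""
    order = itertools.count()                  # tie-breaker for simultaneous events
    first_spike, parent = {}, {}
    events = [(0, next(order), source, None)]  # (arrival time, seq, neuron, sender)
    while events:
        t, _, node, sender = heapq.heappop(events)
        if node in first_spike:
            continue                           # already fired: later spikes are ignored
        first_spike[node] = t
        parent[node] = sender
        for nbr, delay in graph.get(node, []):
            if nbr not in first_spike:
                heapq.heappush(events, (t + delay, next(order), nbr, node))
    return first_spike, parent

def backtrace(parent, target):
    """Follow the sender of each first spike back to the source."""
    path = []
    while target is not None:
        path.append(target)
        target = parent[target]
    return path[::-1]

graph = {
    "A": [("B", 2), ("C", 5)],
    "B": [("C", 1), ("D", 4)],
    "C": [("D", 1)],
}
times, parent = spike_wavefront(graph, "A")
print(times)                      # {'A': 0, 'B': 2, 'C': 3, 'D': 4}
print(backtrace(parent, "D"))     # ['A', 'B', 'C', 'D']
```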

The Nx System Framework

-heterogeneous hierarchical parallel system

-event-driven communication over channels

-localized state

-models describe emergent behavior
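The four properties listed for the Nx framework can be illustrated with a toy program. It has processes with purely local state that communicate only by events over channels, and the composite behavior emerges from the parts. The classes `Channel` and `CountingProcess` are hypothetical and are not the Nx SDK API, which the notes do not describe.

```python
from collections import deque

class Channel:
    """One-way link that carries events from one process to another."""

    def __init__(self):
        self._queue = deque()

    def send(self, event):
        self._queue.append(event)

    def drain(self):
        """Yield and remove every pending event."""
        while self._queue:
            yield self._queue.popleft()


class CountingProcess:
    """A process with purely local state: only this object touches self.count."""

    def __init__(self, inbox, outbox, threshold):
        self.inbox, self.outbox, self.threshold = inbox, outbox, threshold
        self.count = 0

    def step(self):
        for _ in self.inbox.drain():      # work is done only when events arrive
            self.count += 1
            if self.count == self.threshold:
                self.outbox.send("event")
                self.count = 0


# Two-stage pipeline: stage 1 emits once per 2 inputs, stage 2 once per 3,
# so the composite emits once per 6 inputs, a behavior neither stage encodes.
src, mid, out = Channel(), Channel(), Channel()
stage1 = CountingProcess(src, mid, threshold=2)
stage2 = CountingProcess(mid, out, threshold=3)
for _ in range(12):
    src.send("spike")
stage1.step()
stage2.step()
print(len(list(out.drain())))   # 2
```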

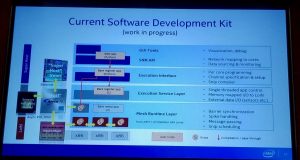

Still building out the software development kit (see pic)

“There are no tensors in this system. Tensors are not the right framework for this event-driven spiking system.”

Intel Neuromorphic Research Community

-we wish to engage with collaborators in academic, government, industry research groups

–remote access to Loihi systems, SDK, SW

–Loaned Loihi system and bare chips

-opportunity for limited funding (RFP available late March)

Q: What memory space does the onboard CPU have access to?

A: Almost all node state is available to the onboard CPU. 128 kB of synaptic memory, with the bits divisible however you want
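Here is a quick worked example of what "bits divisible however you want" means for capacity. It assumes 1 kB = 1024 bytes and ignores any per-entry overhead such as indices or format headers, so the numbers are upper bounds rather than real Loihi figures.

```python
def synapse_capacity(memory_bytes, bits_per_synapse):
    """Upper bound on synapse count if memory is split evenly at one bit width.

    Ignores per-entry overhead (indices, formats)."""
    return (memory_bytes * 8) // bits_per_synapse

MEMORY = 128 * 1024   # 128 kB, assuming 1 kB = 1024 bytes
for bits in (1, 4, 8, 9):
    print(bits, synapse_capacity(MEMORY, bits))
# 1 1048576
# 4 262144
# 8 131072
# 9 116508
```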

Thomas A. Cleland

Neuromorphic Algorithms Derived from Biological Olfaction

Biophysical modeling has revealed key design principles

Computational elements: compensatory or algorithmic

Algorithmic elements are potentially valuable

-the ‘hard problem’ of olfaction

-the generality of this “hard problem” beyond olfactory applications

Inhibitory surround in arbitrarily high dimensions (2006)

Weight matrix is learned; not physicochemical

inhibition affects MC spike timing

implementation on Loihi as I speak

Design principles of interest

-deeply, embarrassingly parallel

-both excitatory and inhibitory synapses; loops

-spike-timing-based computation; gamma clock

-online selective synaptic plasticity

—>spike timing based learning rules

—>low interference–>new learning does not disrupt prior learning

—>latent capacity via adult neurogenesis

—>Neuromorphic design: constraint through structure can increase computational efficacy to resolve real-world problems

embedded prior: need not reinvent the wheel during learning (anatomy is part of the algorithm)

resource-limited, low-n problems

The hard problem of olfaction, and its generality

—>how do you identify the existence of an odor when it's not more or less concentrated than other odors?

Odorants interfere with one another by competing for receptors

Odors (sources) cannot be identified efficiently from mixed inputs based on feedforward processing alone
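A small worked example of receptor competition, using the standard equilibrium model for competitive binding at a single receptor type, θᵢ = (cᵢ/Kᵢ) / (1 + Σⱼ cⱼ/Kⱼ). The dissociation constants are arbitrary assumptions. The point is that odorant A's share of the receptor halves when B is added, even though A's concentration is unchanged. Reading off A's presence from the receptor response alone is therefore ambiguous.

```python
def occupancy(concs, affinities):
    """Fraction of one receptor type bound by each odorant under competitive
    binding at equilibrium: theta_i = (c_i/K_i) / (1 + sum_j c_j/K_j)."""
    ratios = [c / k for c, k in zip(concs, affinities)]
    denom = 1.0 + sum(ratios)
    return [r / denom for r in ratios]

K = [1.0, 0.5]                      # dissociation constants for odorants A and B (arbitrary)
alone = occupancy([1.0, 0.0], K)    # A only
mix = occupancy([1.0, 1.0], K)      # A plus an equal amount of B
print(alone[0], mix[0], sum(mix))   # 0.5 0.25 0.75
```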

hypothesis: the key is prior learning

Homeomorphic/diffeomorphic transformations

categorical learning generates sparse representations of sources, rendering the hard problem theoretically tractable

occluded, noisy signals need to be efficiently attracted toward learned source representations

the blessing of dimensionality protects against destructive interference
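The "blessing of dimensionality" can be checked numerically. Random high-dimensional codes are nearly orthogonal, with mean |cosine| shrinking roughly like 1/√d, so learning a new representation barely overlaps with old ones. This minimal sketch uses random ±1 vectors as a stand-in for learned representations, which is an assumption. The talk's representations are learned, not random.

```python
import numpy as np

def mean_abs_cosine(dim, n_vectors=50, seed=0):
    """Average |cosine similarity| between random +/-1 vectors of a given dimension."""
    rng = np.random.default_rng(seed)
    v = rng.choice([-1.0, 1.0], size=(n_vectors, dim))
    v /= np.linalg.norm(v, axis=1, keepdims=True)
    sims = v @ v.T
    off_diag = sims[~np.eye(n_vectors, dtype=bool)]   # drop self-similarities
    return float(np.abs(off_diag).mean())

for dim in (10, 100, 1000, 10000):
    print(dim, round(mean_abs_cosine(dim), 3))
```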

core principles of implementation

-preprocessing

-generation of orthogonalized representations of complex, diagnostic input patterns via statistical learning

-selective deployment of those orthogonalized representations as inhibition onto the afferent input stream

-generate attractors to these learned signal engrams

-interpretable learning

without learning, odor representations are stationary

stronger activation leads to a phase lead with respect to the gamma clock

without learning, noisy signals are also stationary
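The phase-lead remark, and the earlier note that inhibition shifts spike timing, follow from basic integrate-and-fire dynamics. A leaky integrator reset at the start of each gamma cycle crosses threshold sooner when driven harder, and later when inhibition subtracts from the drive. In this minimal sketch the ~40 Hz cycle, time constant, and threshold are assumed values, not parameters from Cleland's model.

```python
import math

GAMMA_PERIOD_MS = 25.0   # assumed ~40 Hz gamma cycle

def spike_phase(drive, inhibition=0.0, tau_ms=10.0, threshold=1.0):
    """Phase (0..1) within a gamma cycle at which a leaky integrate-and-fire
    neuron, reset at cycle onset, first reaches threshold.

    Solves v(t) = I_net * (1 - exp(-t/tau)) = threshold for t.
    Returns None if the neuron stays silent for the whole cycle."""
    net = drive - inhibition
    if net <= threshold:
        return None
    t = tau_ms * math.log(net / (net - threshold))
    return t / GAMMA_PERIOD_MS if t < GAMMA_PERIOD_MS else None

for drive in (1.2, 1.5, 2.0, 4.0):
    print(drive, round(spike_phase(drive), 3))
```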

Chris Eliasmith

Mike (Intel) approached Chris and asked if there were any applications he could port to the chip

Not much time, 2 months, debugging tools non-existent

Implemented some basic demos just in time

Seemingly good results with adaptive motor control
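No details of the demo were given. As a generic picture of what "adaptive" adds, here is a minimal sketch of a point mass under PD control with an unknown constant disturbance. Without adaptation it settles short of the target. An online-learned bias, updated from the tracking error, cancels the disturbance. With a constant basis this is equivalent to integral action. Neural adaptive controllers, for example ones built with Nengo's PES rule, learn richer state-dependent corrections. All gains and the plant are assumptions.

```python
def simulate(adapt_rate, steps=20000, dt=0.001, kp=10.0, kd=5.0, disturbance=-2.0):
    """Point mass x'' = u + disturbance, tracking x* = 1 with PD control plus
    an adaptive bias term learned online from the tracking error."""
    x, v, bias = 0.0, 0.0, 0.0
    target = 1.0
    for _ in range(steps):
        error = target - x
        u = kp * error - kd * v + bias
        bias += adapt_rate * error * dt     # learn to cancel the unknown force
        v += (u + disturbance) * dt
        x += v * dt
    return x, bias

print(simulate(0.0))    # settles near x = 0.8: PD alone leaves a steady-state error
print(simulate(20.0))   # settles near x = 1.0 with bias near 2.0
```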

Sebastian Schmitt

Heidelberg University

Experiments on BrainScaleS

20 cm wafer, 180nm CMOS

main PCB

48 Kintex-7 FPGAs

Power Supplies

Aux Boards

in development for >10 years

At its heart are analog neural circuits

-dedicated circuits in every neuron for:

–>resting potential

–>reset potential

–>threshold potential

–>reversal potentials

–>refractory period

–>membrane time constant

–>synaptic time constants

–>adaptation

–>exponential term

-Leads to accelerated dynamics
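The parameter list above is that of the adaptive exponential integrate-and-fire (AdEx) model, which the BrainScaleS neuron circuits emulate. Here is a minimal software sketch with Euler integration in biological units. It uses the published Brette & Gerstner (2005) parameter set and a 2 ms refractory period, both chosen here as assumptions. The hardware realizes these dynamics with analog circuits on an accelerated timescale, and conductance-based synapses (reversal potentials, synaptic time constants) are left out for brevity.

```python
import math

# Brette & Gerstner (2005) AdEx parameters; units: pF, nS, mV, ms, pA
P = dict(C=281.0, g_L=30.0, E_L=-70.6, V_T=-50.4, delta_T=2.0,
         tau_w=144.0, a=4.0, b=80.5, V_reset=-70.6, V_peak=0.0, t_ref=2.0)

def adex(I, T=500.0, dt=0.01, p=P):
    """Euler integration of an AdEx neuron driven by a constant current I (pA).
    Returns spike times in ms."""
    V, w = p["E_L"], 0.0
    refractory_until = -1.0
    spikes = []
    for k in range(int(T / dt)):
        t = k * dt
        if t < refractory_until:
            V = p["V_reset"]                   # voltage clamped while refractory
            dV = 0.0
        else:
            spike_current = p["g_L"] * p["delta_T"] * math.exp((V - p["V_T"]) / p["delta_T"])
            dV = (-p["g_L"] * (V - p["E_L"]) + spike_current - w + I) / p["C"]
        dw = (p["a"] * (V - p["E_L"]) - w) / p["tau_w"]   # subthreshold adaptation
        V += dt * dV                           # both derivatives use the pre-step state
        w += dt * dw
        if V >= p["V_peak"]:
            spikes.append(t)
            V = p["V_reset"]
            w += p["b"]                        # spike-triggered adaptation
            refractory_until = t + p["t_ref"]
    return spikes

spikes = adex(I=1000.0)
isis = [t2 - t1 for t1, t2 in zip(spikes, spikes[1:])]
print(len(spikes), [round(d, 1) for d in isis[:3]], round(isis[-1], 1))
```

Inter-spike intervals lengthen over the run as the adaptation current `w` builds up. That is the "adaptation" entry in the list at work.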

HICANN: high input count analog neural network chip

-512 analog neurons, 110000 plastic synapses

-digital communication

-sparse crossbar switches connecting busses

-analog parameter storage

-postprocessing

configuration

-large configuration space per HICANN

—>10000 parameters

-floating gate analog storage

-both programmed via a DAC

calibration

-analog circuits are subject to process-dependent device mismatch

-for the same value of a supplied parameter, the neuron response varies
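Here is a minimal sketch of per-neuron calibration, assuming each neuron's response is a linear function of the programmed parameter with its own random gain and offset. Real mismatch is generally nonlinear and noisy, so this is a simplification. The procedure sweeps the parameter, fits one line per neuron, and inverts the fit to find the parameter that yields a common target. `measure` is a hypothetical stand-in for a hardware measurement. The 512 neurons match the HICANN count in the notes.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 512                                            # neurons on one HICANN
gain = rng.normal(1.0, 0.1, N)                     # hypothetical per-neuron mismatch
offset = rng.normal(0.0, 5.0, N)

def measure(param):
    """Stand-in for a hardware measurement: each neuron maps the same
    programmed parameter to a different observed value."""
    return gain * param + offset

# 1. Sweep the programmed parameter and record every neuron's response.
sweep = np.linspace(0.0, 100.0, 5)
responses = np.stack([measure(s) for s in sweep])  # shape (5, N)

# 2. Fit a per-neuron linear model: response = slope * param + intercept.
slopes, intercepts = np.polyfit(sweep, responses, 1)

# 3. Invert the model to find each neuron's parameter for the target response.
target = 50.0
calibrated = (target - intercepts) / slopes

print(np.std(measure(target)), np.std(measure(calibrated)))
```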

Sek Chai

SRI International

Adaptive Architectures

Bit-Regularized Optimization of Neural Nets

-This talk is about meta-learning, and in particular, fast training using bit precision as an additional hyperparameter

-Allow bit precision to be a floating/trainable parameter

—>bit penalty added to loss function

Learning converges faster compared to static precision

runs with a smaller memory footprint

supports a higher learning rate than a standard neural network

can outperform a standard neural network
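To show the trade-off a bit penalty creates, here is a minimal sketch that quantizes a least-squares model's weights to b bits and scores each b by task loss plus λ·b. The SRI method makes precision trainable inside the optimizer. This sketch only sweeps b, and the quantizer, data, and λ values are assumptions. A larger λ can only push the chosen precision down, never up.

```python
import numpy as np

def quantize(w, bits):
    """Uniform symmetric quantization of w to the given number of bits (bits >= 2)."""
    levels = 2 ** (bits - 1) - 1
    scale = np.max(np.abs(w)) / levels
    return np.round(w / scale) * scale

rng = np.random.default_rng(0)
X = rng.standard_normal((200, 10))
y = X @ rng.standard_normal(10)
w_fp, *_ = np.linalg.lstsq(X, y, rcond=None)       # full-precision weights

def penalized_loss(bits, lam):
    """Task loss (MSE) of the quantized model plus a penalty on bit width."""
    wq = quantize(w_fp, bits)
    return np.mean((X @ wq - y) ** 2) + lam * bits

def best_bits(lam, candidates=range(2, 9)):
    return min(candidates, key=lambda b: penalized_loss(b, lam))

for lam in (0.0, 0.01, 0.1):
    print(lam, best_bits(lam))
```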
