“Look deep into Nature, and then you will understand everything better.” –Albert Einstein

What is Knowm?

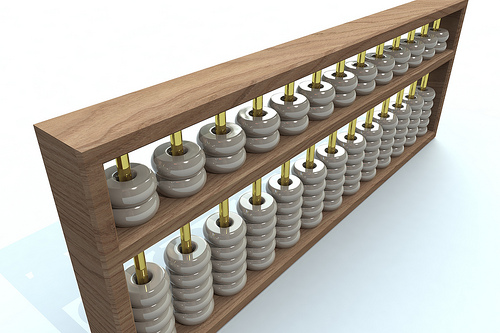

As computer builders, humans have come a long way. We have gone from machines like this:

Image by StockMonkeys.com

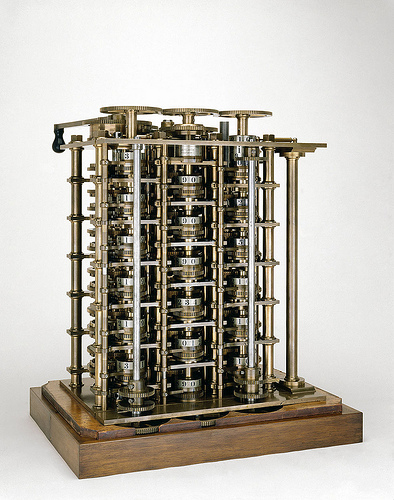

To more complicated and sophisticated mechanical machines like this:

Image by Science Museum London

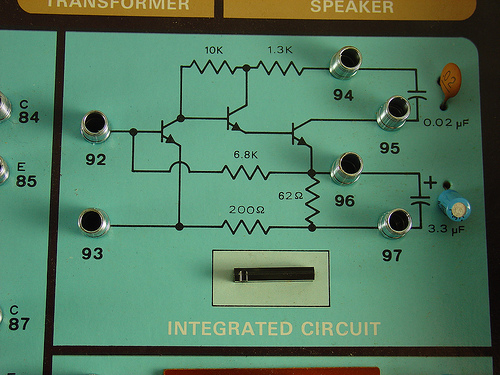

all the way to the incredible integrated electronics of today:

Image by JamesIrwin

Have you ever stopped to marvel at how amazing it is we can build a machine with a billion or more parts, all in their correct place and all doing exactly what they should be doing? While the degree of precision and complexity in modern integrated circuits is impressive (to say the least), it is based on a certain mindset that is so ingrained in our psyche that we are mostly unaware that another way exists. A way that is about to change the face of computing as we know it. Confused? Let’s back up.

What is a machine? We all know that a machine is built of parts, and by putting the parts together in various ways we can build large and complex functioning objects like a car or a plane or an integrated circuit. Now imagine that we removed all human-built machines and artifacts from the world. Every man-made artifact ever created, all machines and tools and parts and pieces just disappeared. What would be left? Lots of plants and animals and fungus and grand rivers and forests. Now let me ask you a question: Are there any machines? You may say ‘no’, and I would not blame you. After all, an animal isn’t a machine. Or a plant. Or a river. These are not machines…right? Not so fast. Our cars are machines and they can move us around. But so can biological bodies. Our computers can run algorithms. But so can brains. Our planes can fly. But so can birds. A backhoe can dig. But so can gophers. If what we see in Nature solves the same sort of problems we solve with our machines, why are they not also machines? Let’s call them “Natural Machines”. Now it’s clear that something is very different between a machine and a Natural Machine. Let me show you with a simple thought experiment. We start with a machine and a Natural Machine:

Image by docentjoyce

Now, let’s put each one in its own box, which is impervious to energy flow. Nothing gets in or out. No air, no heat, no light. We let each box sit there for a month or so and then we open the boxes. What do we find inside? The answer is fairly straightforward. When we open the box with the machine we find it just as we left it:

When we open the box with the Natural Machine we find it has started to disintegrate or fall apart:

Image by Magic Madzik

Why is this? Because the Natural Machine is alive you might say. Well, yes…but this does not really answer the question. It just shifts the question to: What does it mean to be alive?

One of the founders of quantum mechanics pondered this question. In 1944, quantum physicist Erwin Schrödinger published the book What is Life?, based on a series of public lectures delivered at Trinity College in Dublin. Schrödinger asked the question: “How can the events in space and time which take place within the spatial boundary of a living organism be accounted for by physics and chemistry?” Coincidentally, Schrödinger was also famous for his thought experiment of putting a cat in a box. Speaking of that, let’s get back to our boxes…

Why did the machine survive the experiment but the Natural Machine did not? Because the Natural Machine requires flows of energy with the environment to persist. Our Natural Machine ran out of air (or water) and its internal self-repairing process shut down. From this moment on, it was no longer a Natural Machine but just a pile of matter in the process of spreading out. This is the big clue. When the Natural Machine is prevented from exchanging energy with its environment, it dissolves. The machine was not affected by the box, so it must not be dependent on such energy flows. In fact, it is almost exactly the same as when we put it in the box. To describe this property we will borrow a word from chemistry and computing:

Volatile (Chemistry): A measure of the tendency of a substance to vaporize

Volatile (Computing): Memory that lasts only while the power is on (and thus would be lost after a restart)

We will call the Natural Machine volatile and the machine non-volatile. A volatile object requires constant energy dissipation to maintain its state. Take the dissipation away and the volatile object ‘vaporizes’ or ‘loses its state’. We have arrived at one of the most deceptively simple and powerful laws of physics: the 2nd Law of Thermodynamics. The 2nd Law is simple. It states that in a closed system (no energy flows; physicists call this an isolated system), more probable things happen. If there is only one way for a system to be in configuration X but 1,000,000 ways for it to be in configuration Y, odds are good it’s going to be in configuration Y. The ‘closed system’ caveat is important because it allows us to ‘bound’ the system we are interested in and write down the mathematical equations that govern the number of possible configurations or states. In our box there are many more ways to spread the atoms of the Natural Machine around the box in a ‘disordered’ way than in an ordered way (like a cat). And so, over time, more probable things happen.
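To put rough numbers on “more probable things happen”, here is a small counting sketch. It assumes a deliberately simple picture that is ours, not a real gas model: a box split into a left and a right half, with each of 20 labelled particles equally likely to sit in either half. The function name `microstates` is just an illustrative choice.

```python
from math import comb

def microstates(n_particles, n_left):
    """Number of ways to choose which particles sit in the left half of the box."""
    return comb(n_particles, n_left)

n = 20
total = 2 ** n                       # every particle independently picks left or right
all_left = microstates(n, n)         # the fully 'ordered' configuration: 1 way
even_split = microstates(n, n // 2)  # the 'spread out' configuration: 184756 ways

print("P(all on the left) =", all_left / total)
print("P(10 left, 10 right) =", even_split / total)
```

Even with only 20 particles, the evenly spread arrangement has 184,756 ways of happening against a single way for everything to sit on one side. With the astronomically larger number of atoms in a cat, the imbalance becomes overwhelming.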

Schrödinger postulated that living matter evades the decay to thermodynamic equilibrium by maintaining ‘negative entropy’ in an open system. That is, the reason the Natural Machine does not dissolve is that it is dissipating energy or exporting entropy into the environment to counteract its own decay. Somehow the Natural Machine is able to hijack the 2nd Law and use it to power its own growth and repair. If we can figure out how this hijacking occurs we will possess something truly remarkable: We will be able to build machines more like Natural Machines. Why is this important? Because your brain is a Natural Machine, and it is at least nine orders of magnitude more efficient at learning than our computing machines are today.

To understand how this hijacking occurs, it is important to realize that Natural Machines are not the only things to have figured out how to use the 2nd Law to do their bidding. Our machines do this every day, for example in the form of internal combustion engines. When we burn fuel, the hot gas wants to spread out and consequently exerts a force. We capture this force with a piston, which we couple to the stuff we want to move:

In other words, we extract work from the 2nd Law by using an adaptive container. We contain matter that is trying to spread out with a container that can adapt or change, but only in a certain way. By reducing the degrees of freedom available to the adaptive container, we channel the force and put it to work. In the case of the piston, we make the only viable option for expansion the one that pushes the piston up and engages the crankshaft. This all makes sense, right? But how do Natural Machines do it? We certainly do not see little internal combustion engines in Nature, right? It turns out that we do! They are ubiquitous. You have seen them thousands, millions or even billions of times just today. You have seen them so many times you are probably blind to them. Our job is to prepare you to see them, and the only way to do this is to explain how they work. Don’t worry, this is not complicated. If you understand how a piston works you will understand what we are about to show you.

Let us first start with another type of Natural Machine: A tree. Trees are wonderful Natural Machines that combine water and minerals in the soil with sunlight and CO2 in the air to fuel their own growth. Let’s focus on the water first. It’s sitting in liquid form in the ground where it is taken up by the roots, flows through the trunk and branches and exits the leaves, where it evaporates into a gas in a process we call evapotranspiration. Liquid to a gas, just like a steam engine:

http://youtu.be/zjl_Psx3RuY

[Alex captured this video from a documentary online but forgot which one. Any help identifying the original is appreciated!]

A tree is an energy-dissipation pathway that facilitates the flow of water in the ground to gas in the air. It is an adaptive container for the flow of water. When the water reaches the leaves it is used by the photochemical process we call photosynthesis in the plant’s chloroplasts. Sugars are created. These sugar molecules flow down the branches, into the trunk, and down through the roots in an opposite energy-dissipating pathway.

A tree is an energy-dissipation pathway that facilitates the flow of sugars from the leaves to the ground. It is an adaptive container for the flow of sugars. Let’s dwell on this for a second. Water going up, sugars going down. Both are energy-dissipation pathways. Recall our previous observation that a Natural Machine must not be cut off from energy flows with its environment. Perhaps we could say it differently: A Natural Machine is one (or more) energy-dissipating pathway. This is an important distinction. It is not that a Natural Machine dissipates energy so that it can do something else. A Natural Machine is an energy-dissipating pathway. If this is the case, then we should be able to take a look at simpler energy-dissipating systems and study them. Let’s take a look at electric charge dissipation, a.k.a. lightning, in super DUPER slow motion:

Notice a similarity to the tree? This is not a coincidence. This is what energy dissipating itself looks like. To which you might say something like: No it’s not! What about this:

Image by mightyohm

After all, those wires and transistors and capacitors are dissipating energy and they look nothing like a tree or lightning! We of course agree with this. But we said dissipating itself, with emphasis on ‘itself’. That circuit (the dissipation pathway) was created by a human. The electrons are traveling from a source to a sink through a pre-set circuit of resistors, capacitors, inductors and transistors. There is no place for the electrons to go except through it. What would happen if, for example, the wires could grow themselves? That’s what a tree is doing. And the lightning. But how would this even work?

Let’s return to the lightning. Have you ever pondered how ‘alive’ it seems? It’s born with a flash and dies with a boom, all in the blink of an eye. When you look at it in slow motion, it’s almost as if it’s exploring its world, searching for something.

The lightning sends out branches in many paths. Some branches are terminated early. Others grow and further branch. It goes on like this until one of the branches finds the ground. At this point all the charge is channeled into one path and the other branches quickly decay away. Because lightning is so fast and its death so bright we all miss the really interesting part where it’s actively searching for the ground. This purely natural system of energy dissipating itself is making choices about what pathways to explore and what pathways to terminate. Think about this! If we can figure out how this process works, we could exploit it.
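To make the “branch, prune, then commit to one path” pattern concrete, here is a toy sketch. It is emphatically not a physical model of lightning: the grid, the probabilities `branch_p` and `die_p`, and the function name `leader_search` are all our own illustrative assumptions. Tips grow downward one row at a time, sometimes split, sometimes die, and once the ground row is reached we keep only the single channel that leads back to the source.

```python
import random

def leader_search(width=21, height=15, branch_p=0.3, die_p=0.2, seed=0):
    """Grow branching tips downward from the top row until they reach the ground row.

    Returns (path, explored): the one surviving channel from source to ground,
    and the set of every cell that any branch visited along the way.
    """
    rng = random.Random(seed)
    start = (0, width // 2)
    parent = {start: None}  # cell -> the cell it grew from
    tips = [start]

    for _step in range(height - 1):
        next_tips = []
        for i, (row, col) in enumerate(tips):
            # Never let the very last live branch die, so the search always finishes.
            is_last_hope = not next_tips and i == len(tips) - 1
            if not is_last_hope and rng.random() < die_p:
                continue  # this branch terminates early
            n_children = 2 if rng.random() < branch_p else 1
            for _child in range(n_children):
                new_col = min(width - 1, max(0, col + rng.choice((-1, 0, 1))))
                child = (row + 1, new_col)
                if child not in parent:  # cell not yet claimed by another branch
                    parent[child] = (row, col)
                    next_tips.append(child)
        tips = next_tips

    # A tip has reached the ground: trace the winning channel back to the source.
    path = []
    cell = tips[0]
    while cell is not None:
        path.append(cell)
        cell = parent[cell]
    path.reverse()
    return path, set(parent)

path, explored = leader_search()
print(len(explored), "cells explored,", len(path), "cells in the final channel")
```

The gap between the number of explored cells and the length of the final channel is the part we usually miss: most of the structure exists only to find the path, and is then abandoned.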

Lightning is searching the air for ground. Roots are searching the soil for minerals and water. Branches are searching the sky for light. They are all searching. Searching is an important problem in computer science. It turns out that many problems can be reduced to search problems. These problems involve some set of constraints and finding some configuration that satisfies the constraints. One of the ‘purest’ forms of such problems is the Boolean satisfiability (SAT) problem. One algorithm for finding solutions to the SAT problem is called the DPLL (Davis–Putnam–Logemann–Loveland) tree search algorithm and it looks like this:

![]()
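For readers who prefer code to diagrams, here is a minimal sketch of a DPLL-style search. It is a simplified teaching version, not a production solver: it does unit propagation and branching but leaves out pure-literal elimination and any clever variable ordering. The clause encoding (lists of signed integers, where `3` means x3 and `-3` means NOT x3) and the helper name `satisfies` are our own choices for illustration.

```python
def dpll(clauses, assignment=None):
    """Return a satisfying assignment {variable: bool}, or None if none exists."""
    if assignment is None:
        assignment = {}

    # Simplify every clause under the current partial assignment.
    simplified = []
    for clause in clauses:
        remaining = []
        satisfied = False
        for lit in clause:
            var, wanted = abs(lit), lit > 0
            if var in assignment:
                if assignment[var] == wanted:
                    satisfied = True
                    break
            else:
                remaining.append(lit)
        if satisfied:
            continue
        if not remaining:
            return None  # empty clause: this branch is a dead end, so prune it
        simplified.append(remaining)

    if not simplified:
        return assignment  # every clause is satisfied

    # Unit propagation: a one-literal clause forces that literal's value.
    for clause in simplified:
        if len(clause) == 1:
            lit = clause[0]
            return dpll(simplified, {**assignment, abs(lit): lit > 0})

    # Branch: try both values of some unassigned variable.
    var = abs(simplified[0][0])
    for value in (True, False):
        result = dpll(simplified, {**assignment, var: value})
        if result is not None:
            return result
    return None


def satisfies(clauses, assignment):
    """Check an assignment; variables left unassigned default to False."""
    return all(
        any(assignment.get(abs(lit), False) == (lit > 0) for lit in clause)
        for clause in clauses
    )


# (x1 OR x2) AND (NOT x1 OR x3) AND (NOT x2 OR NOT x3)
formula = [[1, 2], [-1, 3], [-2, -3]]
solution = dpll(formula)
print(solution, satisfies(formula, solution))
```

Notice the family resemblance to the lightning: the search sends out a branch, abandons it as soon as it hits a contradiction, and keeps going until one branch reaches a solution.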

Perhaps we could co-opt nature’s desire to dissipate energy and use it to solve problems? How cool would that be?! Well that’s exactly what we are doing at Knowm Inc, and it turns out we can do a lot more than search problems. While the DPLL example is illustrative, it turns out the rabbit hole goes really deep! We can build systems that perceive, solve puzzles and move bodies. We can recognize patterns, make predictions, detect anomalies and move robotic arms. This is what the theory of AHaH Computing is all about. We discovered a fundamental adaptive process common to Natural Machines and then figured out how to build our own with a new type of device called a memristor. A memristor is the electronic equivalent of an adaptive container!

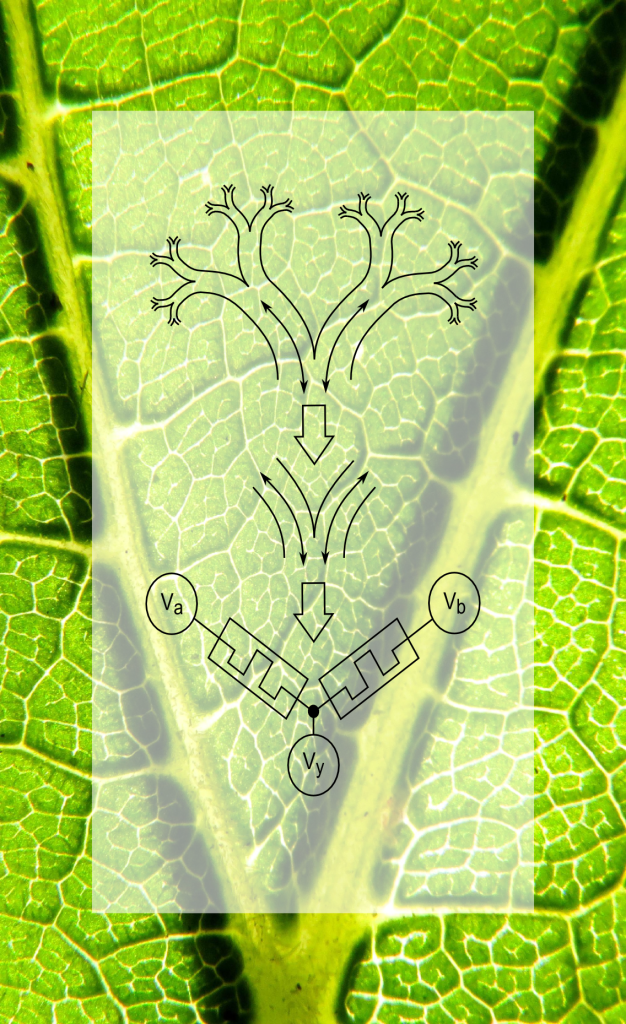

AHaH stands for “Anti-Hebbian and Hebbian” in honor of Donald O. Hebb, who made a famous postulate on how learning (plasticity) occurs in the neurons of our brains. We discovered that the physics of energy dissipating in adaptive containers leads to AHaH plasticity, and we found a number of ways to get this process into a memristive circuit. Before we show you how this works, let’s take a look at a Natural Machine again, and this time let’s look carefully:

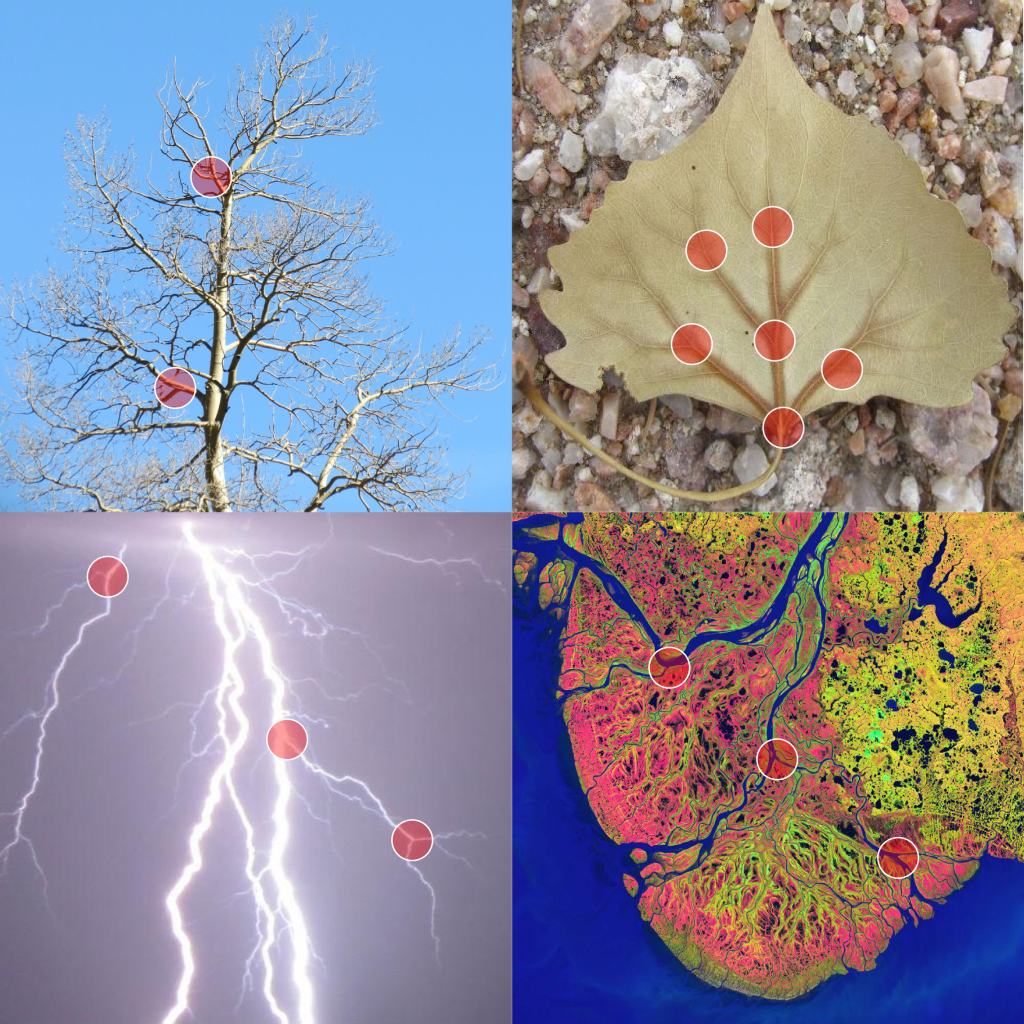

Here we have four Natural Machines: A tree, a leaf, a river delta, and lightning. Can you see how, in each case, they are built of the same repeating building block, over and over again? It is a simple bifurcation that we call “Knowm’s Synapse” or “Nature’s Transistor”. Two competing energy dissipation pathways. As electricity (or water or sugar or whatever) flows through some branch it eventually comes to a decision point. A fork in the road. Some of the energy flows through one fork, and some down the other. As it does this, the adaptive container of that flow changes. If the two dissipation pathways compete for conduction resources then congratulations: you now have a universal adaptive computational building block.

Imagine you are a particle in the high-pressure container of (A). Thanks to the 2nd Law you will probably end up in one of the connected chambers. Which one you end up in is a function of which channel (Ga or Gb) is larger. For example, if Ga is really small and Gb is really big you will very likely go through Gb, as seen in (B). You could say that the particle “chose” the Gb path. What is interesting is what happens to the containers. Since Gb was larger (more conductive), more particles rush into the B chamber. If no additional chambers are available, it will quickly fill up, as seen in (C). This causes the pressure difference across the Gb pathway to fall faster than the pressure difference across the Ga pathway. This, in turn, causes the Ga pathway to increase and the Gb pathway to decrease because the force trying to pry open each channel is proportional to the pressure difference. The higher the force and the longer it is applied, the more work can be done. If we ‘reload’ this system and try again we will find that Ga and Gb are more balanced. Rather than a particle choosing the Gb path we find it just as likely to choose either path. We call this change Anti-Hebbian learning. On the other hand, if new chambers or sinks open up in the B chamber, the largest pressure differential will be across the Gb channel and the change will be just the opposite. We call this Hebbian learning. For a more detailed accounting of all of this, see our PLoS ONE paper.
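The fork can be played with numerically. What follows is a toy model under assumptions that are entirely ours, and it is not the AHaH rule from the paper: a source held at constant pressure, two chambers that fill in proportion to channel conductance times pressure drop, and channels that widen at a rate proportional to the pressure drop across them. The function name `run_fork` and the parameters `capacity`, `rate` and `sink_b` are hypothetical. In this simplified version both channels only ever widen, so the thing to watch is the gap between Gb and Ga rather than one rising while the other falls.

```python
def run_fork(g_a, g_b, sink_b=0.0, source=1.0, capacity=1.0,
             rate=0.05, dt=0.01, steps=2000):
    """Simulate one 'load' of the fork and return the adapted (g_a, g_b).

    sink_b > 0 models extra chambers or sinks opening up behind chamber B.
    """
    p_a = p_b = 0.0  # both chambers start empty
    for _ in range(steps):
        drop_a = source - p_a  # pressure difference across channel A
        drop_b = source - p_b  # pressure difference across channel B
        # Chambers fill through their channel; B may also drain into a sink.
        p_a += dt * g_a * drop_a / capacity
        p_b += dt * (g_b * drop_b - sink_b * p_b) / capacity
        # The force prying each channel open is proportional to its pressure drop.
        g_a += dt * rate * drop_a
        g_b += dt * rate * drop_b
    return g_a, g_b

# Dead-end chamber B: it fills quickly, so the gap between channels shrinks.
anti_a, anti_b = run_fork(0.1, 1.0)
# Chamber B drains into a sink: its pressure drop persists, so the gap widens.
hebb_a, hebb_b = run_fork(0.1, 1.0, sink_b=10.0)

print("initial gap:       ", 1.0 - 0.1)
print("anti-Hebbian gap:  ", anti_b - anti_a)
print("Hebbian gap:       ", hebb_b - hebb_a)
```

With a dead-end B chamber the two conductances end up closer together than they started, and with a sink behind B they end up further apart, which is the qualitative Anti-Hebbian versus Hebbian distinction described above.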

We have only scratched the surface, but so far we have demonstrated solutions across many domains of machine learning. And it all boils down to this: We can build Nature’s Transistor from memristors because memristors are adaptive containers. As a voltage (pressure) is applied to a memristor, its conductance will change.

We are now at a place where we can finally answer our question: What is Knowm?

What we refer to as ‘Knowm’ is the thing that is all around us but nobody really notices. Today you have probably seen it hundreds, thousands or even millions of times. It is responsible for most self-organization on this planet. Nature is built of Knowms, including you.

Knowm is built of a simple part repeated over and over again. We call this part Knowm’s Synapse. It is a point of bifurcation or a branch, where a particle must go one way or another–the “Y”. It is created when two energy dissipation pathways are competing for conduction resources. It appears to be at the heart of most self-organization.

You really have to hand it to Knowm. It is everywhere and yet many people can’t see it.

Knowm exists on huge spatial and temporal scales. You can see it from space in river tributaries and deltas:

Image by Nelson Minar

When energy (for example water) flows through an adaptive container (for example dirt), the medium adapts or erodes in a particular way that causes the energy to be dissipated faster. For example, the water erodes the ground and causes a channel to grow, which lowers the resistance to flow.

Knowm is an energy-dissipating flow structure and hence it is also “temporal”. It is born, lives for a while, and dies. As you have already seen, a good example is lightning:

Knowm is everywhere and almost everything. It is in so many places, it is sort of weird nobody really talks about it. It is in the mycelium networks of fungus, slime mold and multi-cellular bacteria. Knowm is under the sea:

on the side of the road in New Mexico:

and in the leaf of a plant:

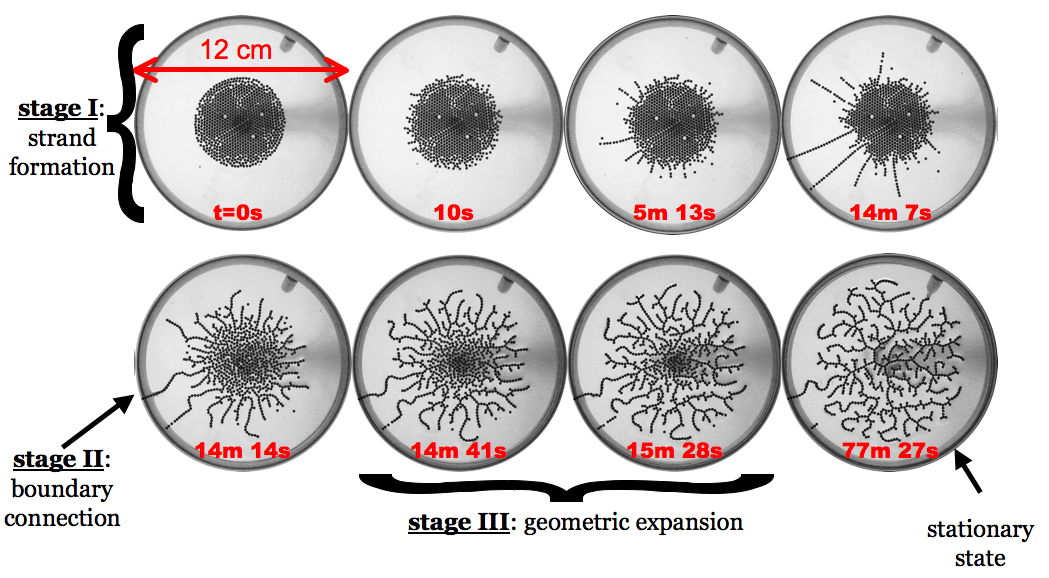

Electricity can build Knowms. If you apply a voltage to a non-conducting liquid with conducting particles, as Alfred Hubler did, they will spontaneously organize into a Knowm:

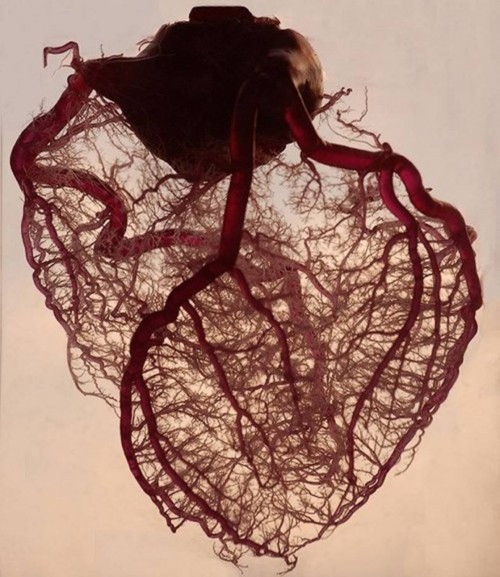

Take a deep breath and contemplate just how pervasive Knowm is. Consider that your lungs are a Knowm too:

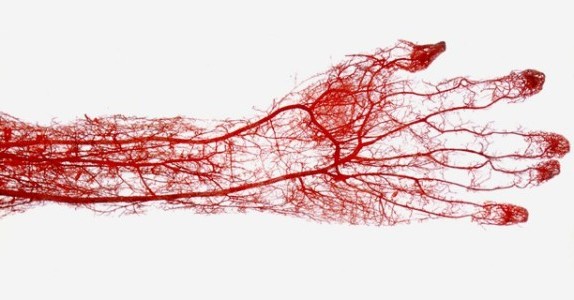

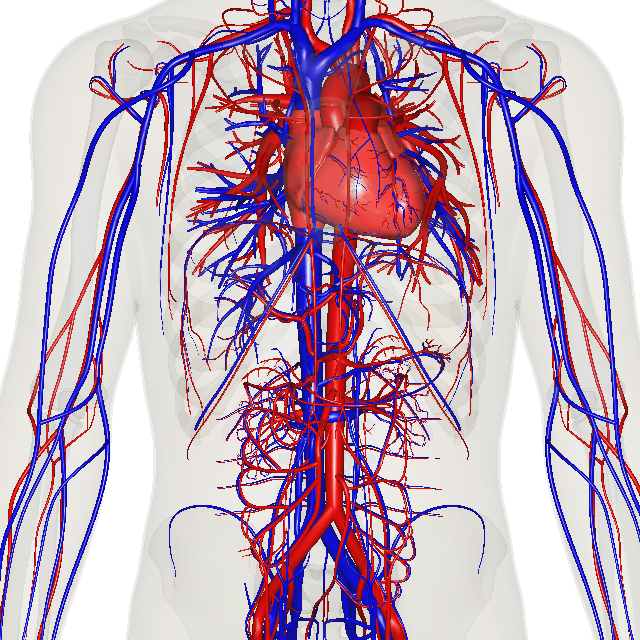

And your whole circulatory system of course.

Right about now we hope you are having the “OMG, is everything built of Knowms?!” epiphany. How could so much of the world be built of Knowms and yet nobody really talks about it? Well, some people are! Adrian Bejan has postulated the Constructal Law and has used it to understand a lot of cool stuff. Perhaps the idea that we can understand how Nature self-organizes is threatening to some people. Perhaps people just don’t know that we are making progress at understanding it and have actually found ways to put it to use. Perhaps it’s because many of us live in cities, far from Nature, stuck in buildings and cars and roads and sidewalks. But Knowm is still there, peeking through the cracks:

The question any creative and curious person may be asking right now is: how can we harness Knowm to do stuff for us? Perhaps something like this:

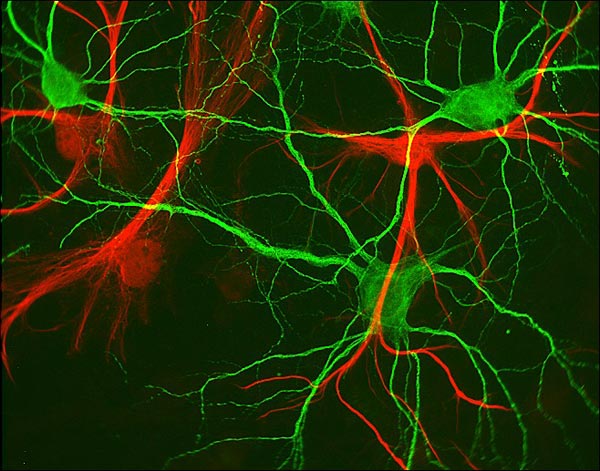

The applications for Knowm and Knowm’s Synapse are everywhere, and understanding how Knowm works is going to have far-reaching implications for all sorts of technology. None more so than our computers, because brains are built of neurons, and neurons are Knowms too:

Our understanding of how to use Knowm’s Synapse to emulate brain functions goes a lot deeper, and you can learn about how we are using memristors to reshape what is possible in computing by reading our PLoS ONE paper AHaH Computing and then helping us build and expand kT-RAM and the Knowm API.

Comments

Paul Bassett

Wonderful essay on the principles underlying self-organization in Nature. Back in the late 60s I did my MSc in AI based on perceptrons, and Minsky and Papert’s book on the subject was my starting point. I was able to prove that lower-order perceptrons could learn to solve higher order polynomials, a result that surprised Minsky.

Stu Kauffman’s work on self organizing systems (e.g., his book: “At Home in the Universe”) convinced me that self-organization was the missing ingredient in explaining how complex systems evolve from simpler ones. For me, your essay is the icing on that cake!

Good luck in bringing memristors to the marketplace and using them to revolutionize computing. I will be watching with bated breath.

Paul Bassett, inventor of Frame Technology

Terry Hill

I found this truly fascinating, and as a sociologist immediately focused on the question: Is it possible that MULTIPLE natural machines (i.e., humans) in concert can unknowingly exhibit memristor properties? If trees, for example, cooperate with other trees to survive, do humans? What is our collective Knowm? It is obvious that a single human is a natural machine as you describe, but are societies? Intuitively I would say “yes”, but humans, unlike other natural machines, have a seemingly built-in proclivity for self-destruction as well as for survival. And we do this at the individual and especially at the social level. And ‘consciously’ (deliberately, from stances of reason and emotion).

Alex Nugent

Terry,

The pattern (so far as I can tell) that is occurring in Nature at all scales is that a particle is flowing through the assembly that is either the direct carrier of free energy (electrons, for example) or else gates access to local free energy reserves of the units of the collective. So at the scale of human civilization I would guess this particle is “money”. If it’s like everything else, the sociological flow-systems should organize to channel the particle in hierarchies.